Cognitive Computational Neuroscience 2019 - A Mini-Report

Published:

TL;DR: This blog post provides an overview of trends & events from the Cognitive Computational Neuroscience (CCN) 2019 conference held in Berlin. It summarizes the keynote talks and provides my perspective and thoughts resulting from a set of stimulating days. More specifically, I cover recent trends in Model-Based RL, Meta-Learning and Developmental Psychology adventures. You can find all my notes here.

My fascination with computational neuroscience was first sparked when I realized the beautiful two-way relationship between better understanding computation in the brain and improving the state of statistical learning.

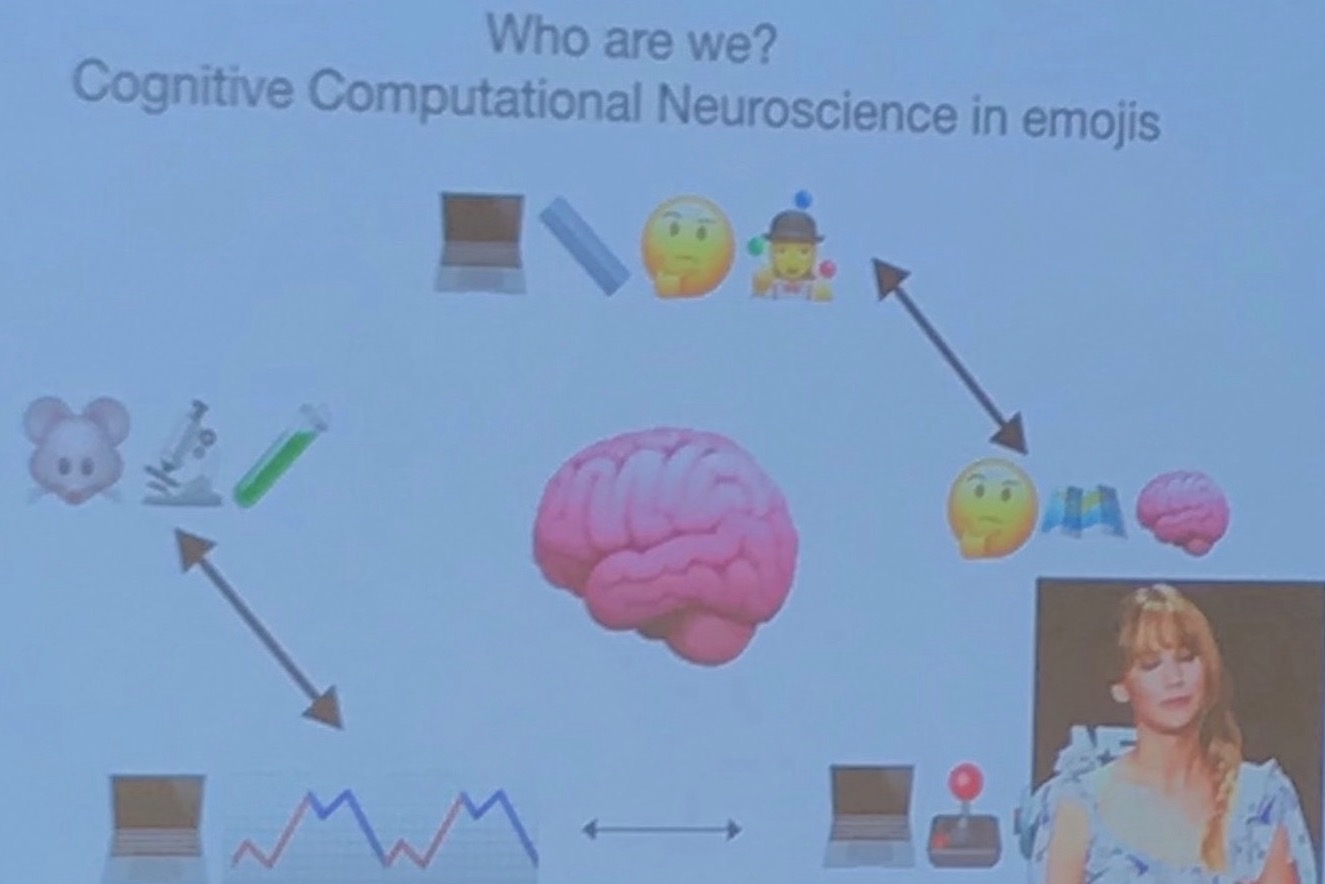

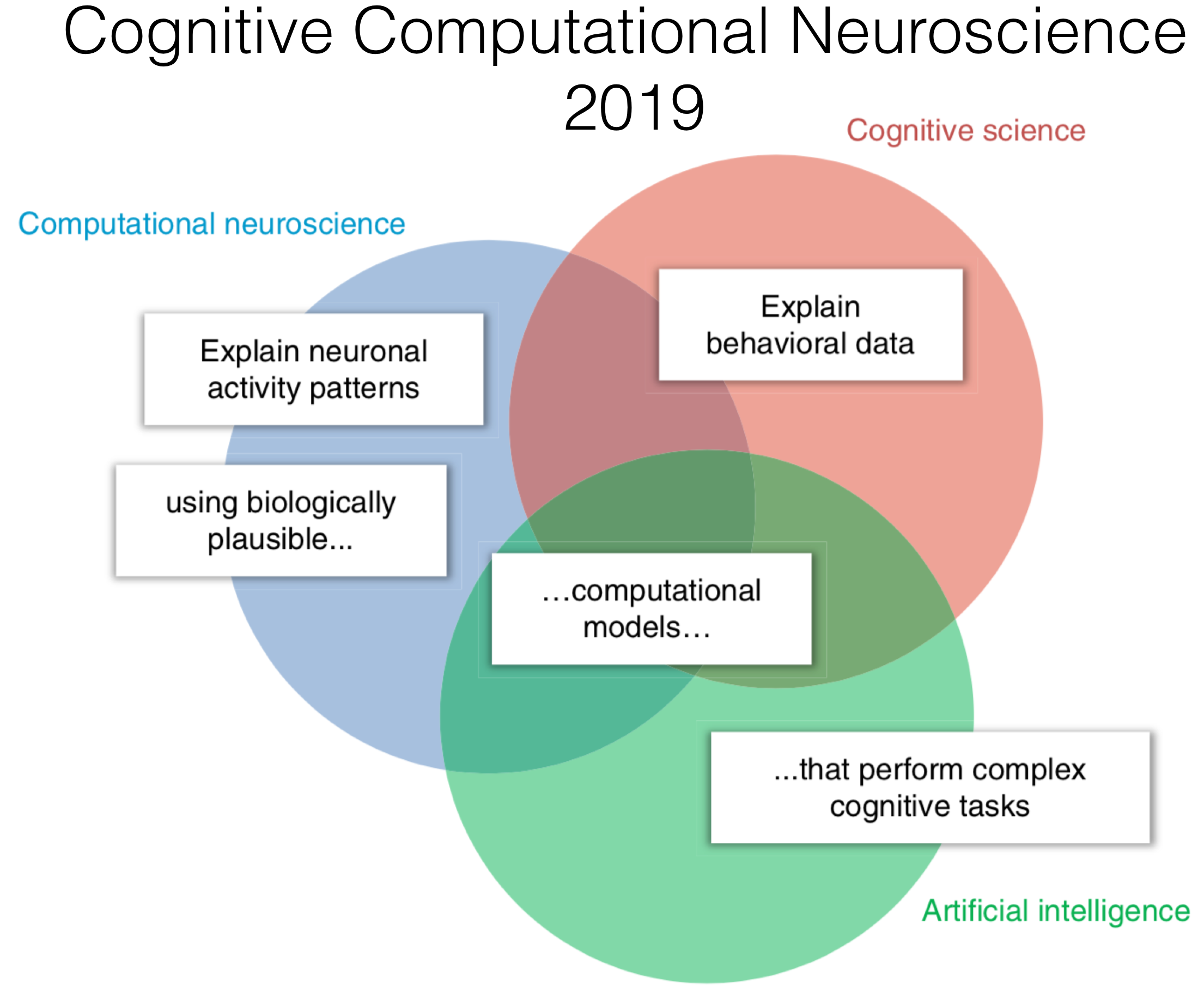

The Cognitive Computational Neuroscience (CCN; Kriegeskorte & Douglas, 2018) conference aims to enhance discussion and collaboration across exactly these fields: Cognitive & Systems Neuroscience as well as Machine Learning. It has grown immensely in the three years since its birth. I love the idea and truly believe that evolution and our cognitive systems should inspire more Machine Learning researchers. That is why I got super excited when my extended abstract was accepted for a poster presentation this year. In this post I share my perspective, as an aspiring Reinforcement Learning researcher, on what I got to learn!

- Day -1: Thursday - 09-12 - Preparation

- Day 0: Friday - 09-13 - Tutorial Day

- Day 1: Saturday - 09-14 - Main I

- Day 2: Sunday - 09-15 - Main II

- Day 3: Monday - 09-16 - Main III

- Memo to Myself: Concluding with a few Take-Aways

- References

Disclaimer: There was so much going on during the 4-day conference that I can't cover it all here. This includes the panel discussion between Jeff Beck & Karl Friston, the breakout sessions as well as many contributed talks. I will focus on the main invited talks and my personal highlights.

Day -1: Thursday - 09-12 - Preparation

Everything is prepared: my poster is printed and the presentation is rehearsed. I printed out the schedule with my favorite talks, posters and sessions highlighted. I am ready, or at least that is what I am telling myself! My goals for the next days are pretty simple: discover connections between Deep RL and cognitive neuroscience, spell them out, and talk to many cognitive RL researchers (e.g. in computational psychiatry). People who develop & apply RL within the cognitive sciences have a very different point of view compared to your favorite Deep RL researcher. Instead of using the fanciest SOTA architectures and massive distributed compute, RL in cognitive neuroscience is often more interested in modeling sample-efficient human behavior and extracting neural correlates of value, prediction errors & state representations. This naturally lends itself to high-level model-based approaches.

In my opinion the Deep RL community can learn quite a bit & take inspiration to create new cognitive-inspired algorithms which make use of insights from cognitive neuroscience and psychology. And that is what I am aiming at!

This year's CCN was co-located with the Bernstein Conference in the beautiful city of Berlin, literally 5 minutes away from my office. That meant more time for science and socializing, but less tourism.

Day 0: Friday - 09-13 - Tutorial Day

Tutorial: Representing States and Spaces

Day 0 had the tutorials in store. I decided to check out “Representing states and spaces” by Tim Behrens and (“Neuro”-)Kim Stachenfeld. Sample efficiency, skill transfer and exploration are three key topics in current DRL research, and model-based approaches provide a powerful potential solution. Algorithms such as Dyna (Sutton, 1991) and PILCO (Deisenroth & Rasmussen, 2011) iteratively infer the state transition dynamics and thereby allow the agent to “hallucinate” transitions without actually having to query the oracle (aka the environment). This allows for the notion of ‘planning’, something that has long been associated with the prefrontal cortex & the hippocampal circuit. In order to infer such dynamics we need to get the representation of states right.

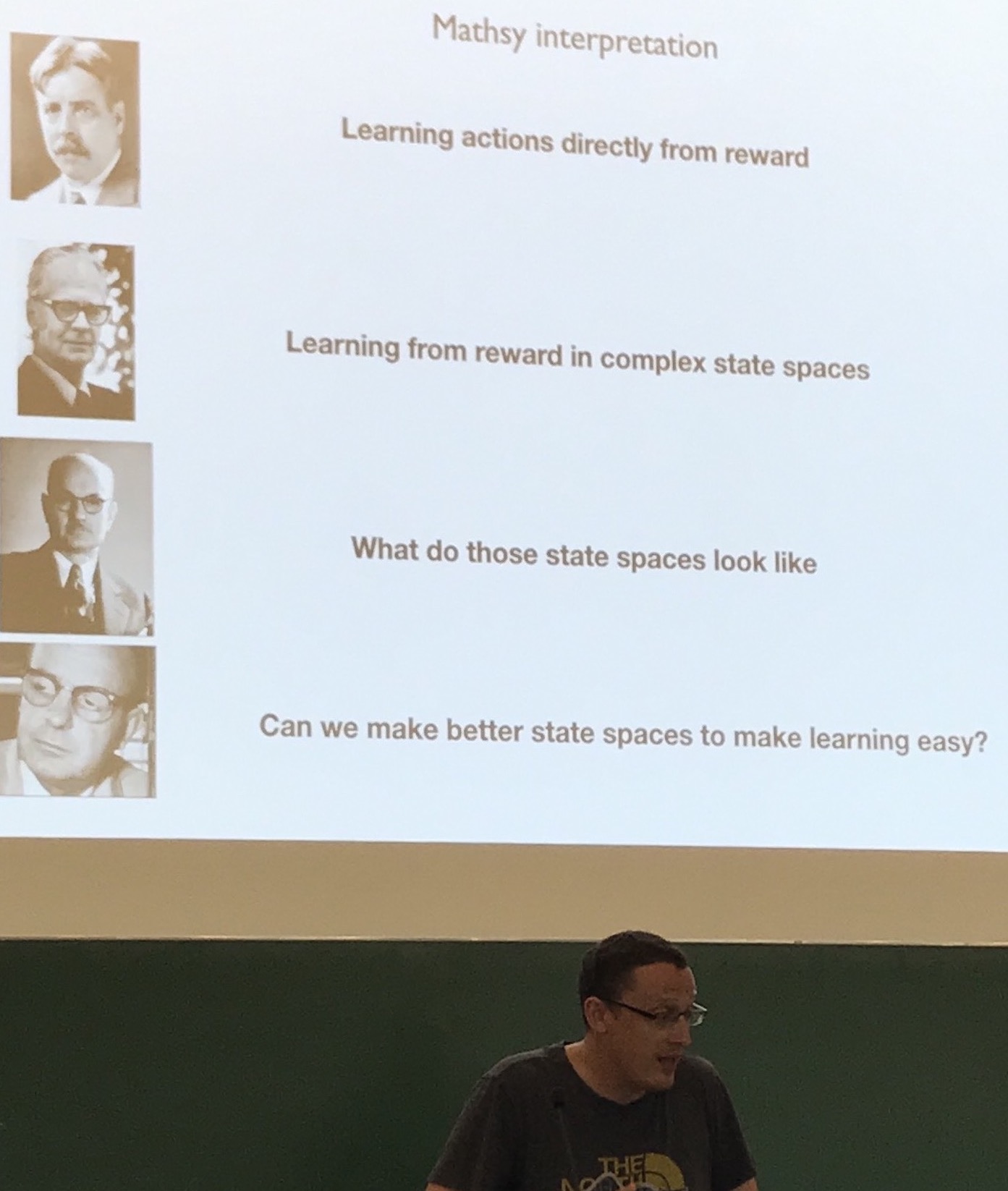

In the tutorial we covered a range of theories, from the original value/reward-based learning work of Thorndike and Pavlov to the more model-based foundations of Tolman and Harlow.

At the core of such ideas lies the concept of cognitive maps (Behrens et al., 2018) and the importance of representing states in a task-efficient manner. Many of the results discussed about hippocampal activity (theta sweeps as well as sharp wave ripples (SWRs)) were new to me. Many people seem to argue for a mix of model-based and model-free RL systems. For example, replay observed during SWRs (at rest/sleep) is interpreted as a model-based system training a model-free system (as in Dyna!). Furthermore, recent work by Mattar & Daw (2018) on optimal backups for Dyna appears to explain variability in hippocampal replay. More recent work by Tim Behrens’ group sheds some light on relational inference using grid-like codes in the hippocampal formation.
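To make the idea of “a model-based system training a model-free system” concrete, here is a minimal tabular Dyna-Q sketch. The corridor task, `env_step`, `dyna_q` and all hyperparameters are hypothetical choices for illustration, not something presented in the tutorial. Each real transition updates Q directly and is stored in a learned model; the model is then replayed to generate extra “hallucinated” updates, loosely analogous to offline replay.

```python
import random
import numpy as np

N_STATES, N_ACTIONS = 6, 2   # hypothetical corridor: action 0 = left, 1 = right
GOAL = N_STATES - 1

def env_step(s, a):
    """Deterministic corridor; reward 1 for reaching the right-most state."""
    s_next = max(0, s - 1) if a == 0 else min(GOAL, s + 1)
    reward = 1.0 if s_next == GOAL else 0.0
    return s_next, reward, s_next == GOAL

def dyna_q(episodes=30, n_planning=20, alpha=0.5, gamma=0.95, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = np.zeros((N_STATES, N_ACTIONS))
    model = {}  # learned model: (s, a) -> (r, s_next, done), filled from real experience
    for _ in range(episodes):
        s, done = 0, False
        while not done:
            # epsilon-greedy action selection with random tie-breaking
            if rng.random() < epsilon:
                a = rng.randrange(N_ACTIONS)
            else:
                best = np.flatnonzero(Q[s] == Q[s].max())
                a = int(rng.choice(list(best)))
            s_next, r, done = env_step(s, a)                   # query the "oracle"
            target = r + (0.0 if done else gamma * Q[s_next].max())
            Q[s, a] += alpha * (target - Q[s, a])              # direct (model-free) update
            model[(s, a)] = (r, s_next, done)                  # update the learned model
            # planning: replay "hallucinated" transitions sampled from the model
            for _ in range(n_planning):
                (ps, pa), (pr, ps_next, pdone) = rng.choice(list(model.items()))
                ptarget = pr + (0.0 if pdone else gamma * Q[ps_next].max())
                Q[ps, pa] += alpha * (ptarget - Q[ps, pa])
            s = s_next
    return Q

Q = dyna_q()
print("greedy action per state:", Q.argmax(axis=1))
```

The planning loop is the only difference from plain Q-learning: setting `n_planning=0` recovers the purely model-free learner, which typically needs many more real episodes to propagate the goal reward back to the start.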

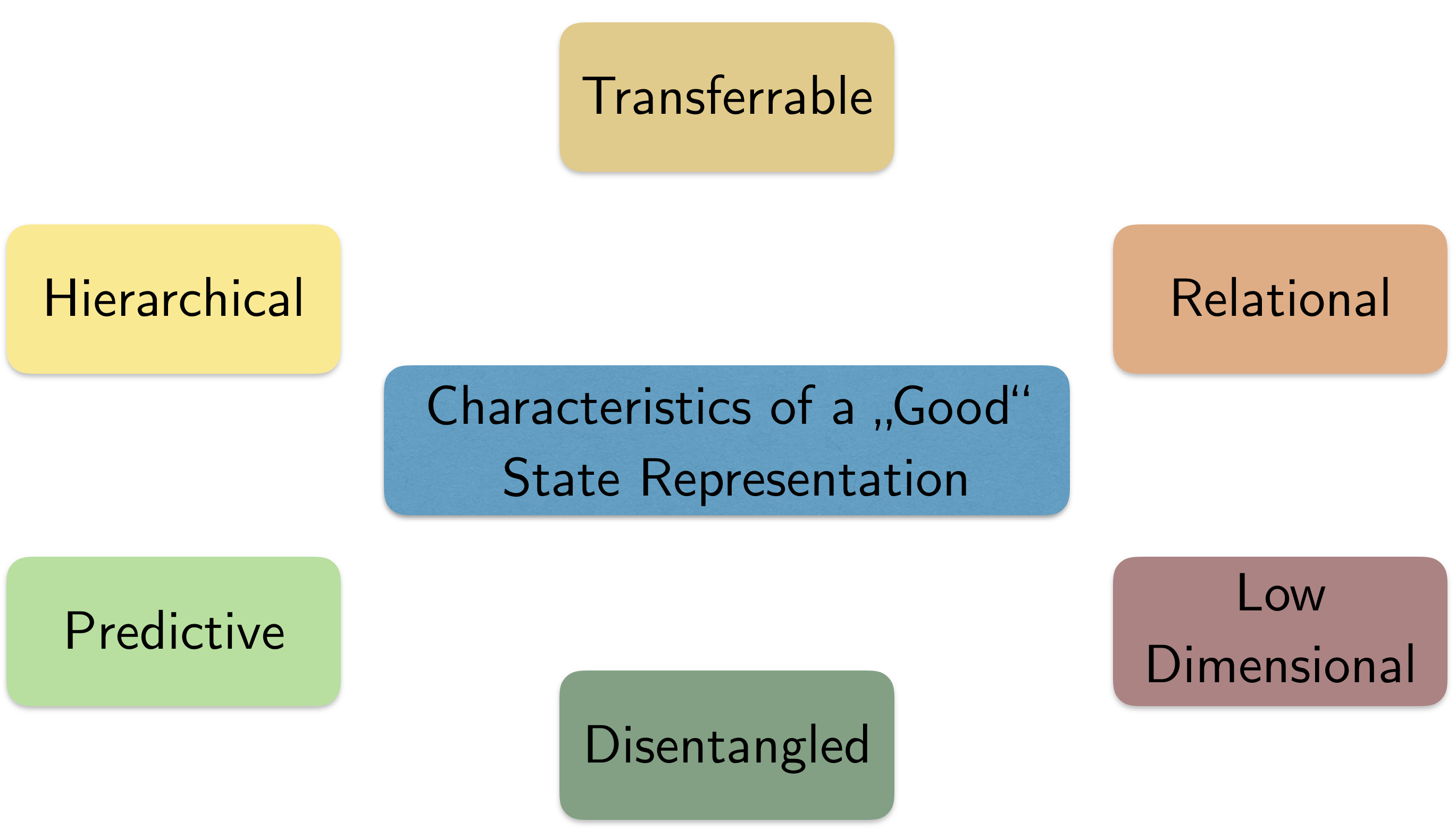

So what makes a good representation for solving a task? Tim and Kim gave a list of characteristics (see diagram). These can more or less be achieved with the help of successor representations, which appear to have neural correlates in the hippocampus (e.g. Stachenfeld, 2018).
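A successor representation (SR) $M(s, s')$ stores the expected discounted future occupancy of $s'$ when starting in $s$, so that values become a linear readout $V = M r$. The sketch below learns $M$ with a TD-style update on a hypothetical three-state ring and compares it with the closed form $(I - \gamma P)^{-1}$; the task, names and hyperparameters are illustrative assumptions, not from the tutorial.

```python
import numpy as np

n, gamma, alpha = 3, 0.5, 0.1
# Hypothetical task: deterministic ring s0 -> s1 -> s2 -> s0
P = np.roll(np.eye(n), 1, axis=1)   # P[s, s'] = 1 if s' follows s

# TD learning of the SR: M[s] <- M[s] + alpha * (onehot(s) + gamma * M[s'] - M[s])
M = np.zeros((n, n))
s = 0
for _ in range(3000):
    s_next = (s + 1) % n
    target = np.eye(n)[s] + gamma * M[s_next]
    M[s] += alpha * (target - M[s])
    s = s_next

M_exact = np.linalg.inv(np.eye(n) - gamma * P)   # closed form: sum_t gamma^t P^t

r = np.array([0.0, 0.0, 1.0])       # reward only in s2
V = M @ r                            # values are a linear readout of the SR

# Revaluation: if the reward moves, values update instantly without relearning M
r_new = np.array([1.0, 0.0, 0.0])
V_new = M @ r_new
print(np.round(M, 3), np.round(V, 3), np.round(V_new, 3))
```

The last two lines show the design appeal: the SR factorizes “where will I go” from “what is it worth”, so a change in reward only requires a new dot product.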

A key question that remained unresolved for me revolves around Markovianity. Classically, the RL problem has been framed within the MDP setting. The Markov assumption entails that transition dynamics (and rewards) are independent of the past given the present, i.e. $p(s_{t+1}, r_{t+1} \mid s_t, a_t, s_{t-1}, a_{t-1}, \dots, s_0, a_0) = p(s_{t+1}, r_{t+1} \mid s_t, a_t)$. Sometimes this assumption is violated by simple state formulations (as in Atari games, where a single frame does not reveal how fast an object is moving). In their original DQN work, DeepMind simply stacked 4 frames to obtain a “state” which can capture the notion of velocity. RNNs can circumvent this somewhat ad-hoc state construction by integrating information over time. But this essentially requires learning a representation that satisfies the Markov assumption. Which leads to my question: How can we disentangle learning a representation that resolves Markovianity from learning one that is helpful for solving the downstream task? Or is Markovianity even required to solve the task using powerful function approximators?! And why should the brain be Markovian in the first place? Memory efficiency is an unsatisfying argument.
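The frame-stacking trick can be sketched in a few lines. This is a minimal illustration in the spirit of DQN, not DeepMind's preprocessing code: `FrameStack`, `render_ball` and the 1-D toy “frames” are hypothetical. A single frame only reveals the ball's position, whereas the stack of the last four frames also reveals its velocity.

```python
from collections import deque
import numpy as np

class FrameStack:
    """Keep the k most recent observations and expose them as one stacked 'state'."""
    def __init__(self, k=4):
        self.k = k
        self.frames = deque(maxlen=k)

    def reset(self, first_frame):
        # Pad with copies of the first frame so the stack is always full
        self.frames.clear()
        for _ in range(self.k):
            self.frames.append(first_frame)
        return self.state()

    def step(self, frame):
        self.frames.append(frame)   # the oldest frame is dropped automatically
        return self.state()

    def state(self):
        return np.stack(list(self.frames), axis=0)   # shape: (k, *frame_shape)

def render_ball(position, width=10):
    """Hypothetical 1-D 'frame': a single bright pixel at the ball's position."""
    frame = np.zeros(width)
    frame[position] = 1.0
    return frame

stack = FrameStack(k=4)
state = stack.reset(render_ball(0))
for t in range(1, 4):
    state = stack.step(render_ball(t))   # the ball moves one pixel to the right per step

positions = state.argmax(axis=1)         # ball position in each stacked frame
velocity = positions[-1] - positions[-2]
print(positions, velocity)
```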

Liz Spelke: 6 Core Infant Knowledge Systems

In the evening the grand-opening keynote lecture was delivered by Liz Spelke. In her talk Prof. Spelke gave a brilliant overview of her work in developmental psychology. She opened by arguing that the highest general intelligence we know of is actually not achieved by the adult human brain, but by 0- to 5-year-old infants.

Their adaptability and flexibility by far exceed all our imagination. She went on to review 6 core infant knowledge systems (see picture), all of which are innate (as shown in multiple experiments, e.g. the ‘visual cliff’). I especially enjoyed this ‘nature’ focus on the innate capabilities of infants. The structure of our environment has remained fairly stable; distance, for example, has always behaved in a Euclidean way. Hence, evolution and genetics provide a set of strong inductive biases (framed as knowledge systems) which facilitate rapid few-shot learning. Furthermore, Spelke provided experimental evidence that infants first learn to understand the actions of others as causal before acting upon objects themselves. This really made me rethink meta- and self-supervised learning as a form of causal learning tool that allows many different “rough” skills to be encoded in the weight configuration.

Finally, she highlighted the outstanding role of language, which requires us to combine & compose the different knowledge systems. She hypothesized that language learning provides general support for flexible imitation, concept learning and transfer learning. This is very much in line with my Master's thesis & the context of Hierarchical Reinforcement Learning (insert shameless self-plug).

Day 1: Saturday - 09-14 - Main I

Tim Behrens: The Tolman-Eichenbaum Machine

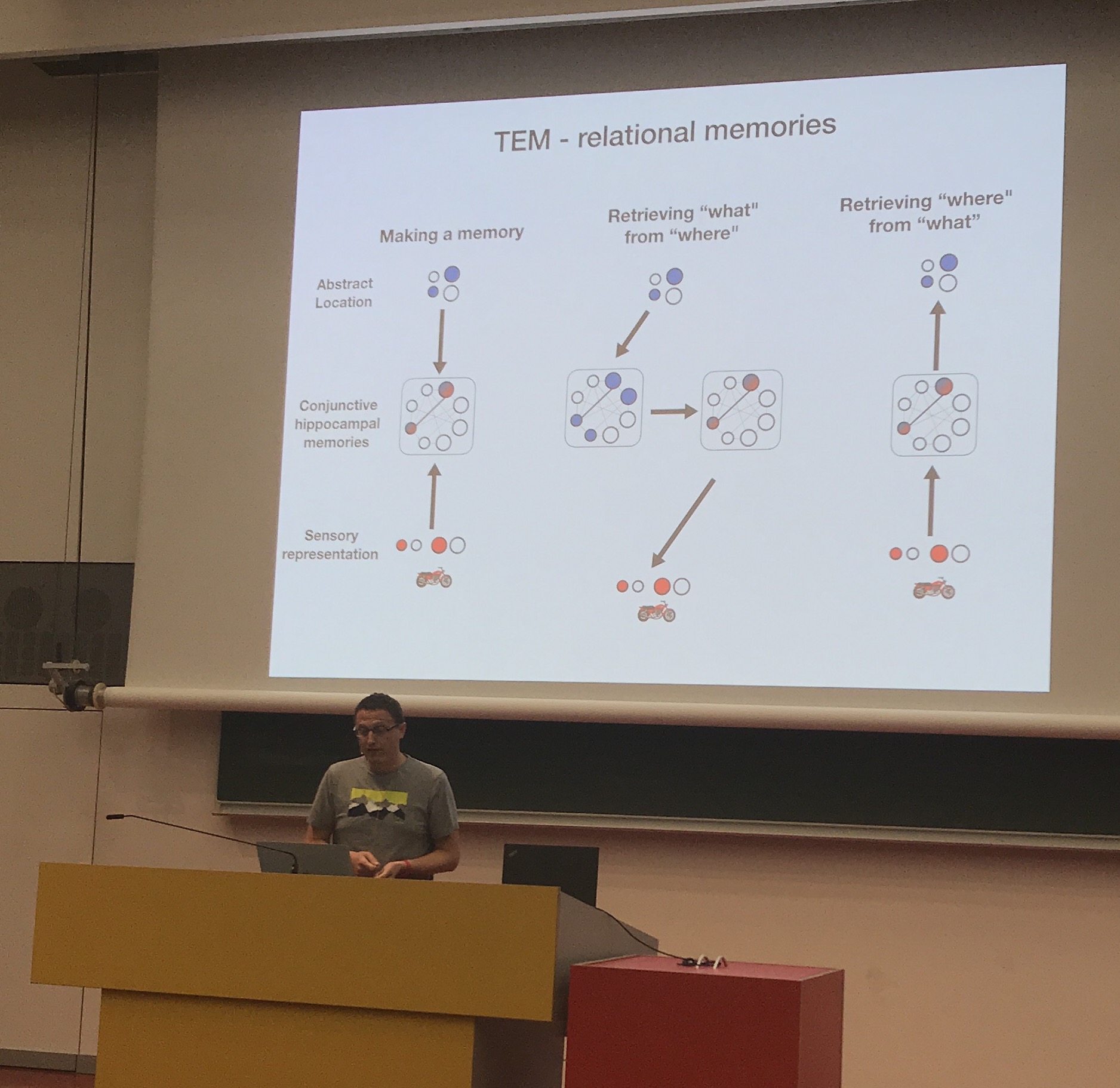

On Saturday the first talk was delivered by an energetic Tim Behrens, who continued where we had left off during the tutorial: abstraction & inference in the frontal-hippocampal circuit. He argued that structural knowledge allows for efficient inference. A special kind of structure is given by relations between objects, which allow for transitive reasoning (e.g. if A > B and B > C, then A > C). And grid cells seem to be involved in such relational inference tasks.

He introduced the Tolman-Eichenbaum Machine (TEM), which can perform path integration/graph completion from limited data (Whittington et al., 2019). It combines two components: a relationship representation (learned via backprop) and a memory (learned via Hebbian plasticity).
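To give a feel for the “memory learned via Hebbian plasticity” part, here is a generic Hebbian hetero-associative memory (a sum of outer products between co-active patterns). This is a textbook sketch, not the TEM's actual memory module; the random bipolar `keys` and `values` are hypothetical stand-ins for, say, location and sensory codes.

```python
import numpy as np

rng = np.random.default_rng(0)
dim, n_items = 64, 5

# Hypothetical random bipolar patterns standing in for "location" and "sensory" codes
keys = rng.choice([-1.0, 1.0], size=(n_items, dim))
values = rng.choice([-1.0, 1.0], size=(n_items, dim))

# Hebbian storage: strengthen weights between co-active units (sum of outer products)
W = np.zeros((dim, dim))
for k, v in zip(keys, values):
    W += np.outer(v, k) / dim

def recall(cue):
    """Retrieve the pattern associated with a (possibly noisy) cue."""
    return np.sign(W @ cue)

# Cue with a corrupted key: flip 10% of its entries
noisy = keys[2].copy()
flip = rng.choice(dim, size=dim // 10, replace=False)
noisy[flip] *= -1
accuracy = np.mean(recall(noisy) == values[2])
print(f"fraction of correctly recalled units: {accuracy:.2f}")
```

Storage is a single local update per association (no backprop), which is what makes this kind of memory fast to write; the price is crosstalk between stored items as memory load grows.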

The resulting relational memories can efficiently generalize to complete graphs and transfer knowledge quickly. They demonstrate this in simple gridworld environments, where the TEM picks up a factorized notion of walls. So how might this help us generalize across environments? The key word is hippocampal remapping. Tim argued that such remappings are by no means random but preserve the relational structure. Finally, he hypothesized that SWRs might act as a form of training for a generative model such as the TEM.

Roshan Cools: Addressing Dopaminergic Trade-Offs

Next was Roshan Cools, who presented new insights on dopamine & mental work. Neuromodulators such as dopamine and noradrenaline allow for flexibility within the connectome. In her talk Roshan put an emphasis on the dopamine-modulated trade-off between stability and flexibility. Intuitively, staying focused is fairly costly, since it may lead to you being eaten by a tiger.

Classically, there has been a dual view (see picture) of how dopamine regulates focus. The striatal pathway influences reward-based decision making as in the reward prediction error hypothesis (flexibility), while the cortical pathway is responsible for cognitive control and short-term working memory (stability). A major challenge for current dopamine research is the large variability in the effects of drugs targeting dopamine: one and the same dose can lead to beneficial concentration as well as addictive gambling behavior. It has been argued that this is due to a strong baseline dependency of the striatal pathway, which in turn biases the PFC. Roshan showed some Dynamic Causal Modeling evidence for the role of striatal dopamine in cognitive gating. She hypothesized that the midbrain affects the dorsal striatum, which in turn modulates the value of cognitive work. The next logical follow-up question becomes: what controls the midbrain? Roshan believes that it is the medial frontal cortex and that there are many more trade-offs (including different timescales, dorsal vs. ventral striatum as well as the influence of environmental statistics on baseline levels)! All in all a great talk with a lot of food for thought.

A Matching of Minds

Afterwards, the awesome mind matching session took place. When registering, participants were able to sign up for an informal exchange of ideas. Based on a list of submitted abstracts, Konrad Körding’s matching algorithm paired up participants. In a series of six 10-minute exchanges one got to talk about science, passion & projects. My abstracts all had to do with either Representational Similarity Analysis or Deep Reinforcement Learning, and all my matches worked in exactly these fields! I had many great discussions (including with Tim Kietzmann and Maarten Speekenbrink). I was pleasantly surprised by how many senior researchers participated and shared their thoughts & project ideas. My only wish would have been a little more time! I often had to rush to the next match and to the following talk by Anne Churchland.

Anne Churchland: Importance of Uninstructed Movements

Anne presented some outstanding results and hypotheses surrounding seemingly random movement (aka fidgeting). A key challenge of studying single-trial neural dynamics lies in disentangling activity associated with movement from activity associated with cognition. To overcome this, her group combined an auditory/visual spatial detection task with 2-photon imaging (without penetrating the mouse skull) as well as behavior quantification from video frames. A fairly compute-intensive ridge regression analysis using temporal event kernels and filterless analog regressors (from the video frames) resulted in a surprising insight: uninstructed movements dominate the recorded neural activity.
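The core of such an encoding-model analysis can be sketched as follows. Everything here is simulated and hypothetical (`event_design`, the stimulus trace, the movement trace, the kernel shape, `lam`); it is not the group's pipeline, only an illustration of event kernels (time-shifted copies of an event trace, one weight per lag), an analog movement regressor, closed-form ridge regression, and comparing explained variance across regressor sets.

```python
import numpy as np

rng = np.random.default_rng(1)
T, lags = 2000, 10   # time points and event-kernel length (hypothetical)

def event_design(events, n_lags):
    """Time-shifted copies of a binary event trace: one column per post-event lag."""
    X = np.zeros((len(events), n_lags))
    for lag in range(n_lags):
        X[lag:, lag] = events[:len(events) - lag]
    return X

# Hypothetical regressors: a task event (e.g. stimulus onset) and an analog movement trace
stim = (rng.random(T) < 0.02).astype(float)
movement = np.convolve(rng.standard_normal(T), np.ones(20) / 20, mode="same")

X_task = event_design(stim, lags)
X_move = movement[:, None]
X_full = np.hstack([X_task, X_move])

# Simulated neuron: weak stimulus response, strong movement component, plus noise
true_kernel = np.exp(-np.arange(lags) / 3.0)
y = 0.3 * X_task @ true_kernel + 2.0 * movement + 0.1 * rng.standard_normal(T)

def ridge_fit(X, y, lam=1.0):
    # closed-form ridge solution: (X^T X + lam * I)^-1 X^T y
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def r2(X, y, lam=1.0):
    pred = X @ ridge_fit(X, y, lam)
    return 1.0 - np.sum((y - pred) ** 2) / np.sum((y - y.mean()) ** 2)

r2_task, r2_move, r2_full = r2(X_task, y), r2(X_move, y), r2(X_full, y)
print(f"task only: {r2_task:.2f}  movement only: {r2_move:.2f}  full: {r2_full:.2f}")
```

In this toy setting the movement regressor explains far more variance than the task kernel, which is the flavor of the reported result; a real analysis would use cross-validation and many more regressors.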

The magnitude of this effect is reduced with learning and time but does not vanish. Instead, stable habits emerge, which might indicate the beginning of a sequence of motor commands. I had never thought that random behavior & tics might actually stabilize and serve a function for higher-level cognition. But her argument that such movements are too metabolically costly to be irrelevant really stuck with me. Have a look at the recently published Nature Neuroscience paper. In general, Anne’s talk was simply amazing: very clear, concise and to the point. After every key insight she had a ‘sceptic’s corner’ section in which she addressed major points of critique. Absolute public speaking goals!

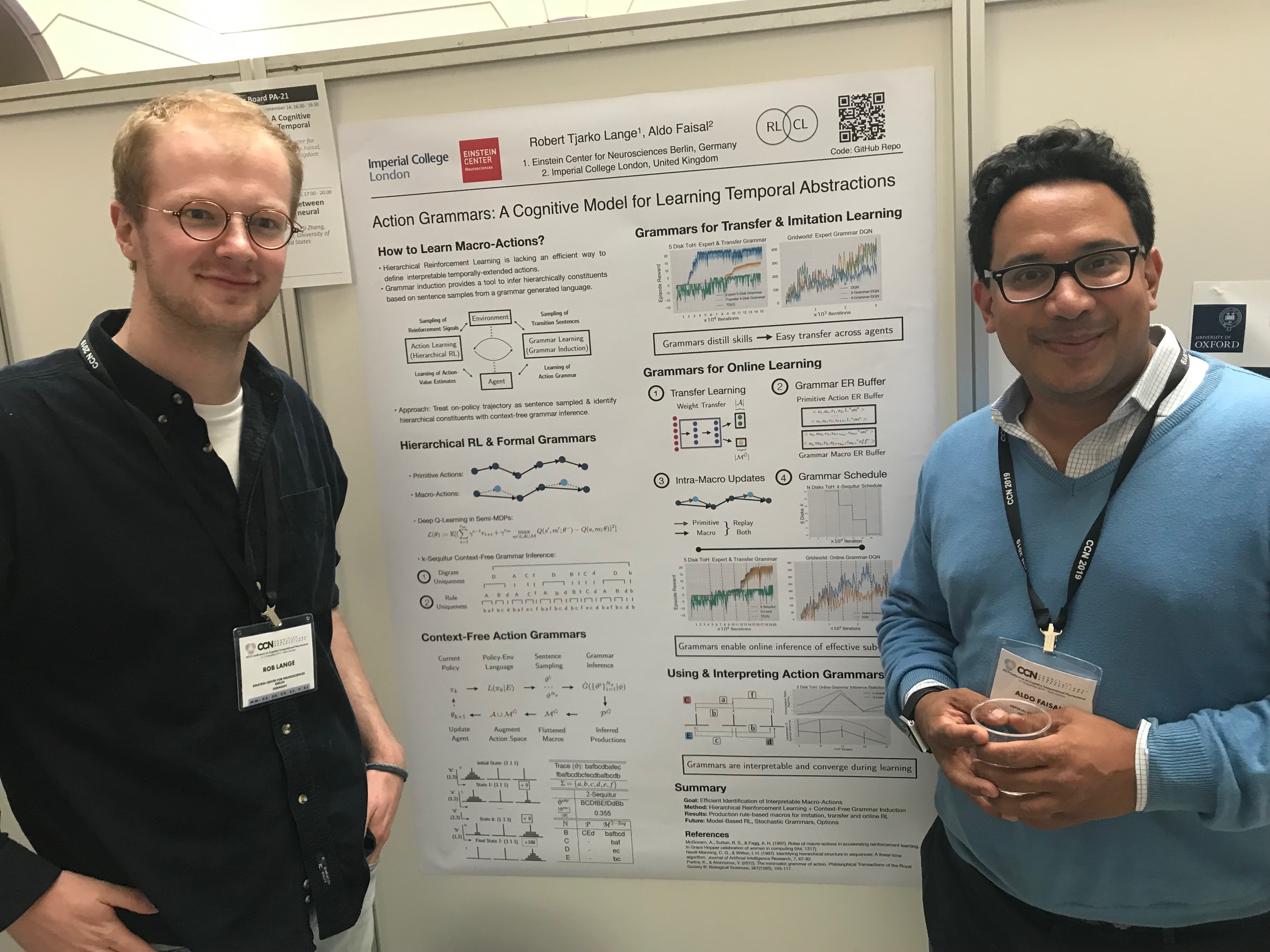

A Poster Session with Action Grammars

In the evening I had my first big-scale conference poster presentation. And man, was it fun. I got to present my Imperial College Master's thesis project on Action Grammars (see poster below), supervised by Aldo Faisal. The idea is fairly simple: our behavior is hierarchically structured, and so is language. So let’s use tools from computational linguistics to define hierarchical sub-policies in the context of Hierarchical Reinforcement Learning. It turns out this helps both with distilling expert skills and with “reflecting” online on successful habits.
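As a toy illustration of the grammar idea, the sketch below compresses an action sequence by repeatedly replacing the most frequent adjacent pair of symbols with a new non-terminal (a Re-Pair-style digram replacement). Each non-terminal then acts as a candidate macro-action. This is a simplified stand-in, not necessarily the grammar-inference algorithm used in the thesis; `infer_macro_actions`, `expand` and the `"M0"`, `"M1"`, ... symbol names are hypothetical.

```python
from collections import Counter

def infer_macro_actions(sequence, min_count=2):
    """Greedy digram replacement: repeatedly replace the most frequent adjacent
    pair of symbols with a new non-terminal ('macro-action')."""
    seq = list(sequence)
    rules = {}
    next_id = 0
    while True:
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < min_count:
            break
        symbol = f"M{next_id}"
        next_id += 1
        rules[symbol] = pair
        # rewrite the sequence left to right, replacing non-overlapping occurrences
        new_seq, i = [], 0
        while i < len(seq):
            if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
                new_seq.append(symbol)
                i += 2
            else:
                new_seq.append(seq[i])
                i += 1
        seq = new_seq
    return seq, rules

def expand(symbol, rules):
    """Recursively unfold a macro-action into its primitive actions."""
    if symbol not in rules:
        return [symbol]
    left, right = rules[symbol]
    return expand(left, rules) + expand(right, rules)

demo = list("LLRU" * 3)   # hypothetical primitive actions: Left, Left, Right, Up, ...
compressed, rules = infer_macro_actions(demo)
print(compressed, rules)
```

In an HRL agent, the expanded rules could be added to the action set as temporally extended options, so that frequently reused action chunks become single decisions.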

If you are intrigued, check out the arXiv preprint! By the end of the session I was super exhausted but also happy to have spoken to many interesting minds with great questions and inspirational thoughts. After a long dinner I was so ready for bed. What a day.

Day 2: Sunday - 09-15 - Main II

Nando De Freitas: Co-Evolving Imitation & Abstraction

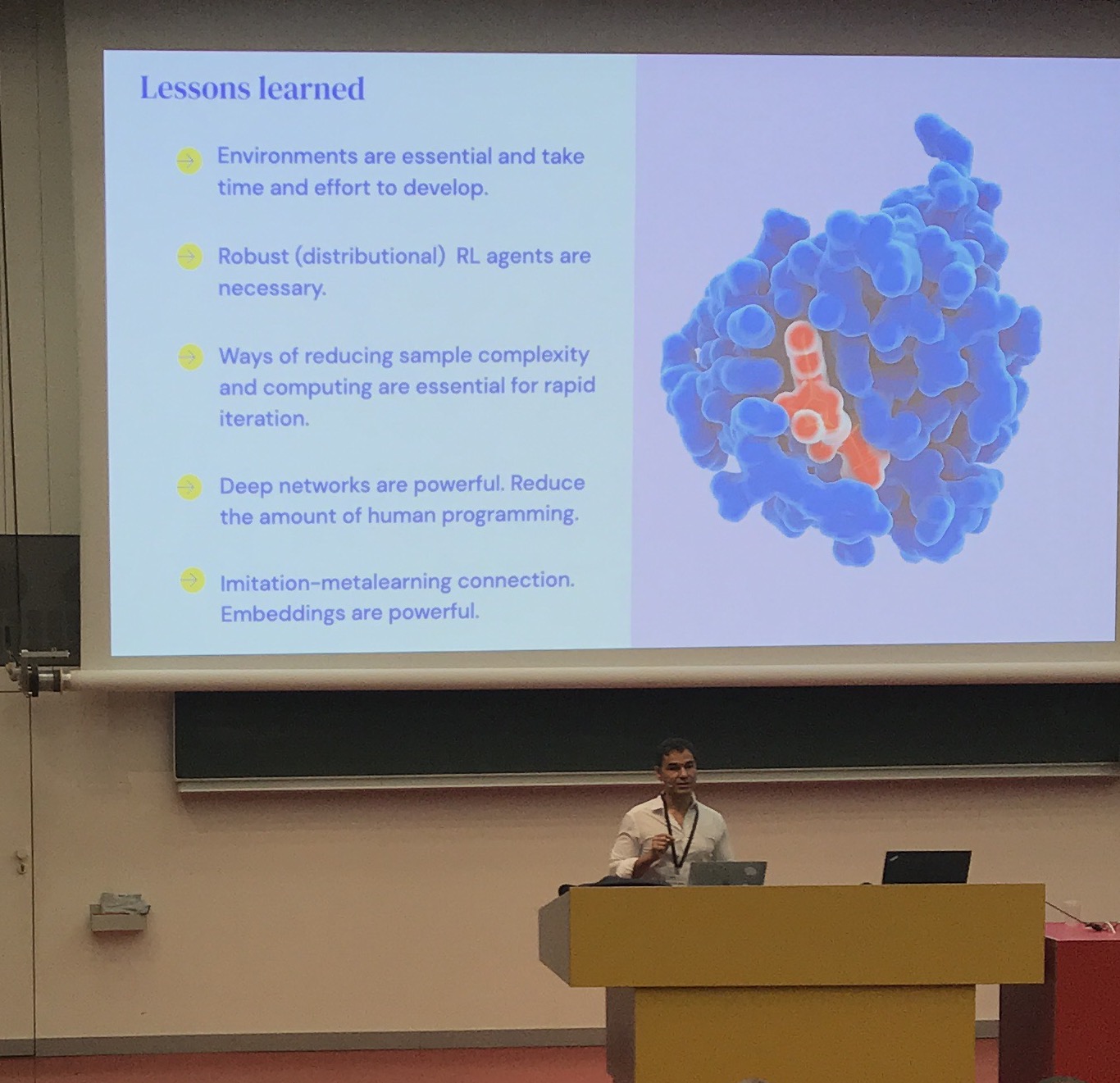

Meta-learning is one of the hottest topics in Deep Learning, and it is all about fast adaptation to task-specific context. In the first talk of day 2, Nando de Freitas from DeepMind kicked things off with the hypothesis that imitation & abstraction have co-evolved.

More specifically, Nando argued that symbolic reasoning and the distillation of skills allow for efficient exploration. He covered a series of conceptual frameworks (one-shot imitation, few-shot meta-learning as well as unsupervised learning) & highlighted many recent success stories (including MAML, Neural Programmer-Interpreters and Self-Supervised Learning). Nando very much pushed the idea of learning latent embedding vectors from demonstrations. These embeddings can then provide a form of behavioral description. Furthermore, the latent space can accommodate a potentially infinite number of behaviors. Conditioning on a given set of video frames allows the network to generalize at test time. The core idea can be summarized as: learn a fixed core from demonstrations & flexibly interpolate in the latent embedding space. This idea also lies at the heart of the evolutionary perspective on meta-learning and fast adaptation. The fixed core corresponds to an initialization that has been optimized on a slow timescale using tons of data. The embedding, on the other hand, can be rapidly adapted (within our lifetime, e.g. via plasticity) using only a few SGD steps or permutations.
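The “fixed core, fast embedding” split can be illustrated with a deliberately tiny linear model. This is a hypothetical sketch, not any of the architectures from the talk: the decoder `W` plays the role of the slowly learned core (here simply random and frozen), and only the latent embedding `z` is adapted with a few gradient steps to match a single demonstration.

```python
import numpy as np

rng = np.random.default_rng(0)
obs_dim, latent_dim = 20, 3

# Hypothetical "fixed core": a decoder from a latent behavior embedding z to behavior.
# Here it is random; in practice it would be meta-learned on a slow timescale.
W = rng.standard_normal((obs_dim, latent_dim))

# A new behavior, described by an unknown embedding z_true, seen once as a noisy demo
z_true = rng.standard_normal(latent_dim)
demo = W @ z_true + 0.01 * rng.standard_normal(obs_dim)

def loss(z):
    return 0.5 * np.sum((W @ z - demo) ** 2)

# Fast adaptation: a few gradient steps on the embedding only; the core W stays frozen
z = np.zeros(latent_dim)
lr = 1.0 / np.linalg.norm(W, 2) ** 2   # step size 1/L (L = largest eigenvalue of W^T W)
losses = [loss(z)]
for _ in range(10):
    grad = W.T @ (W @ z - demo)
    z -= lr * grad
    losses.append(loss(z))

print([round(l, 3) for l in losses[::3]])
```

Because only three numbers are adapted, a single demonstration suffices; the expensive part (learning a good `W`) is assumed to have happened beforehand.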

Bernhard Schölkopf: Causal Learning the Universe

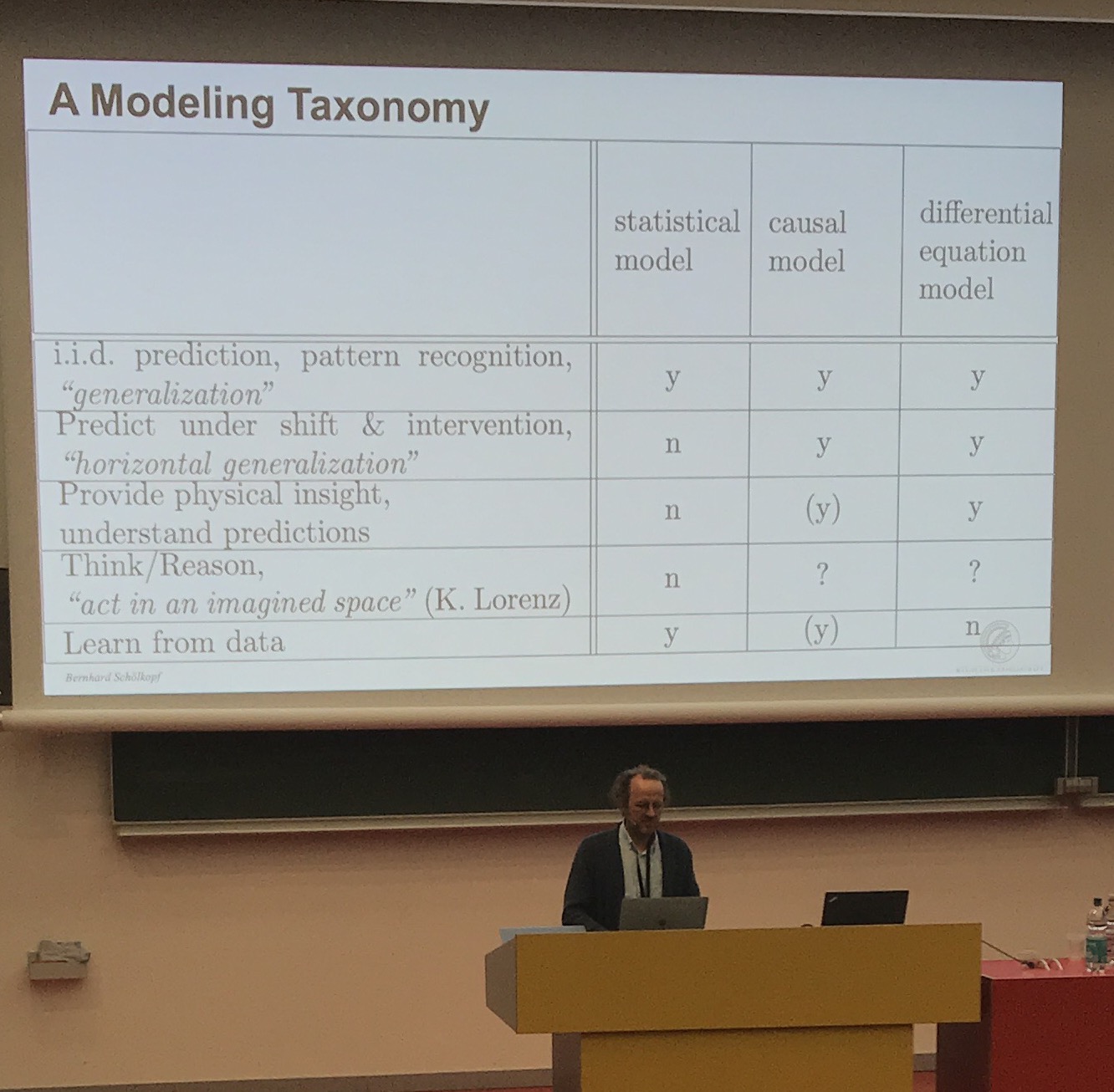

Afterwards, Bernhard Schölkopf presented some of his work on causal learning including some exciting results on extrapolating between planets in the universe.

Most modern SOTA Machine Learning algorithms rely on an i.i.d. assumption, which can easily be exploited by adversarial examples. By optimizing the mutual information between labels and feature-based predictions (a symmetric quantity), we crucially disregard innate causal relationships (which are asymmetric). Structural causal models, on the other hand, allow us to reason about directional relationships and provide a mechanistic point of view. Schölkopf discussed recent endeavors to link causal and statistical structure with the help of invariance. Intuitively, we humans learn about objects by manipulating them and our point of view. We can thereby infer their nature by conducting a series of natural experiments, which relies upon the object not changing its identity (aka being invariant to our transformations). And to do so, we rely upon good representations (something Yoshua Bengio has been advocating forever!). Finally, Schölkopf concluded by highlighting a recent application of causal learning to help generalize between images of lightyear-separated planets. Based on both planets sharing common parent nodes, he and his collaborators were able to predict the existence and properties of until-then undiscovered planets. Truly belly-warming!
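One concrete way to exploit a shared parent node is half-sibling regression: if a target signal $Y$ and other measurements $X$ share an unobserved confounder $N$ (e.g. instrument noise) but only $Y$ contains the signal of interest, then $Y - \mathbb{E}[Y \mid X]$ removes the shared part and keeps the target-specific signal. Whether this is exactly the method from the talk is my assumption; the simulated “stars”, the transit dip and all parameters below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
T, n_other = 500, 30

# Hypothetical setup: unobserved systematic noise N (the shared parent) affects every star
N = np.cumsum(rng.standard_normal(T)) * 0.1
transit = np.zeros(T)
transit[200:210] = -1.0   # faint target-specific signal (e.g. a transit dip)

# Half-siblings share the parent N but not the transit signal
mixing = rng.uniform(0.5, 1.5, size=n_other)
X = N[:, None] * mixing + 0.05 * rng.standard_normal((T, n_other))
y = 2.0 * N + transit + 0.05 * rng.standard_normal(T)

# Regress the target on its half-siblings: they can only explain the shared part,
# so the residual approximates the target-specific signal
X1 = np.hstack([X, np.ones((T, 1))])
coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
recovered = y - X1 @ coef

in_transit = recovered[200:210].mean()
out_transit = np.delete(recovered, np.arange(200, 210)).mean()
print(f"in-transit mean: {in_transit:.2f}, out-of-transit mean: {out_transit:.2f}")
```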

Day 3: Monday - 09-16 - Main III

Máté Lengyel: Bayesian Internal Models

After a somewhat disappointing discussion of the Free-Energy Principle (or framework?), I was happily surprised by Máté Lengyel’s talk. He spoke about Bayesian internal models and a fairly disciplined series of experiment-theory matches. Internal models define mental representations of our surroundings, spanning sensory perception, decision making, conceptual knowledge and navigation.

Máté structured the talk in 3 parts and provided a probabilistic modeling perspective for each of them: (1) Adaptation to changes in the environment. (2) Task-Modality-Independence. (3) Neural Substrates of Uncertainty. My main take-aways are the following:

- As the complexity of an environment and/or task increases, a Bayesian learner outperforms an associative learner. The Bayesian agent is able to generalize with the help of latent variable inference.

- Subjects appear to have similar priors across tasks with shared structure, but priors vary quite a bit across subjects.

- Amortized inference in latent variable models may allow us to fit neural dynamics which underlie uncertainty representations such as variability, oscillations and transitions.
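A minimal illustration of the first take-away, under assumptions of my own (not the experiments from the talk): rewards are generated by one of two hidden contexts that can switch with a small hazard rate. The Bayesian learner filters a posterior over the latent context, while the associative learner applies a Rescorla-Wagner delta rule. After an unannounced switch, the Bayesian learner's prediction recovers much faster. `P_REWARD`, `HAZARD` and the outcome sequence are hypothetical.

```python
P_REWARD = {0: 0.2, 1: 0.8}   # hypothetical reward probability in each latent context
HAZARD = 0.05                 # assumed probability that the context switches per trial

def bayesian_learner(outcomes, prior_c1=0.5):
    """Filter the posterior over the latent context c (a two-state hidden Markov model)."""
    p1 = prior_c1
    for r in outcomes:
        p1 = (1 - HAZARD) * p1 + HAZARD * (1 - p1)          # the context may have switched
        like1 = P_REWARD[1] if r else 1 - P_REWARD[1]
        like0 = P_REWARD[0] if r else 1 - P_REWARD[0]
        p1 = like1 * p1 / (like1 * p1 + like0 * (1 - p1))   # Bayes' rule
    predicted = P_REWARD[1] * p1 + P_REWARD[0] * (1 - p1)
    return predicted, p1

def associative_learner(outcomes, alpha=0.1, v0=0.5):
    """Rescorla-Wagner / delta rule: nudge a single value towards each outcome."""
    v = v0
    for r in outcomes:
        v += alpha * (r - v)
    return v

# Context 1 for five trials, then an unannounced switch to context 0 (true p = 0.2)
outcomes = [1] * 5 + [0] * 5
bayes_pred, posterior_c1 = bayesian_learner(outcomes)
delta_pred = associative_learner(outcomes)
print(f"true p(reward)=0.20  Bayesian={bayes_pred:.2f}  delta rule={delta_pred:.2f}")
```

The advantage comes entirely from the structural knowledge baked into the generative model (two contexts, known reward rates); with a misspecified model the comparison can go the other way.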

Nathaniel Daw: Dopaminergic Heterogeneity and RL

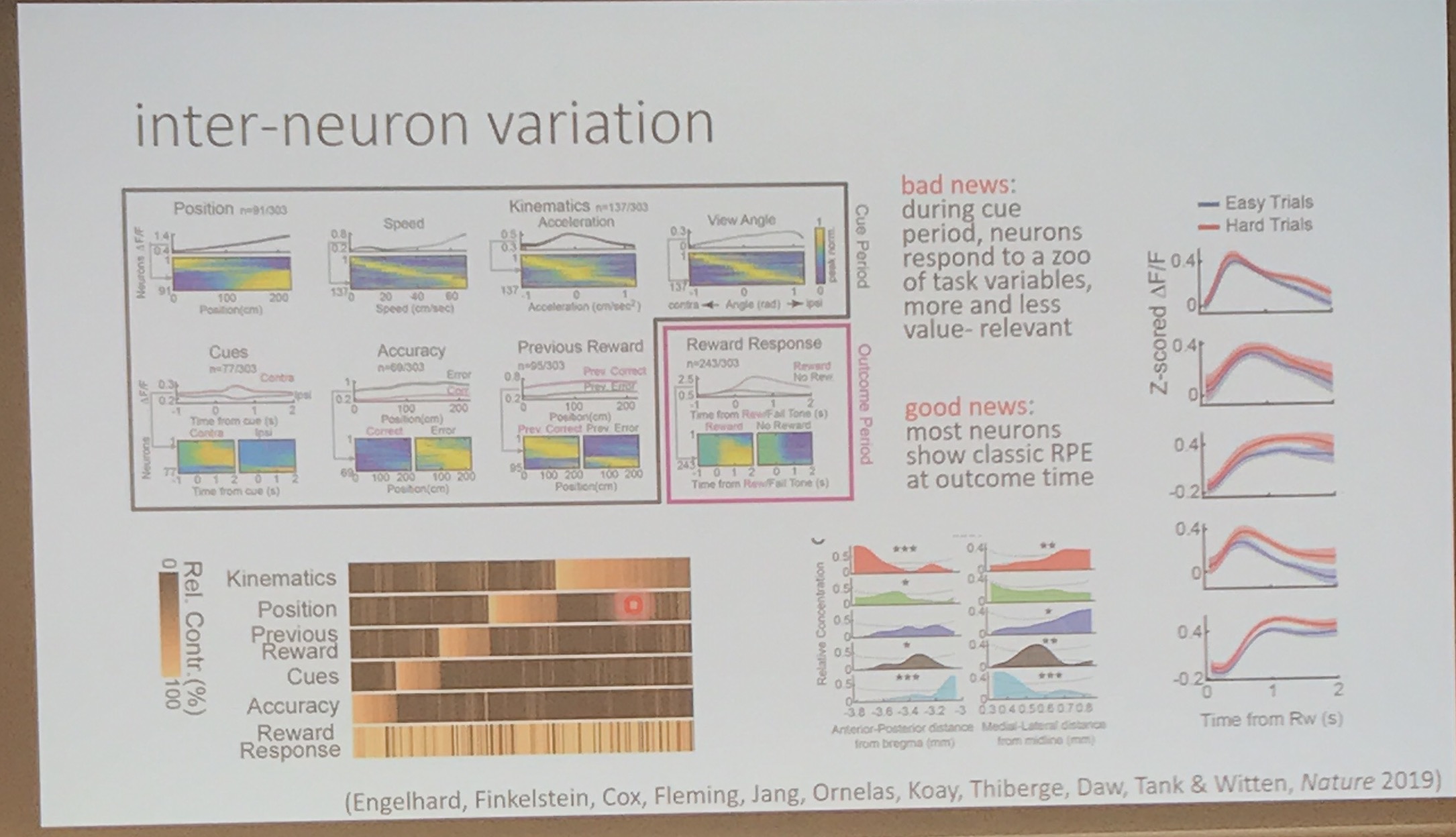

Since I had to start my volunteering duties at the Bernstein conference, my last CCN talk was delivered by Nathaniel Daw. The classic RPE-dopamine story by Schultz et al. (1997) showed that the average activity of a population of dopaminergic neurons is highly correlated with the magnitude and sign of the temporal difference (TD) error $\delta$, which drives the value update:

\[\delta_t = r_{t+1} + \gamma V(s_{t+1}) - V(s_t), \qquad V(s_t) \leftarrow V(s_t) + \alpha \delta_t\]

But dopaminergic neurons actually exhibit quite some heterogeneity: some fire more strongly and more readily than others.
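The update above can be run directly. This sketch applies tabular TD(0) to a hypothetical three-state chain with a reward of 1 on the final transition; `td0_chain` and its parameters are illustrative. In the first episode the prediction error appears only at reward time; once values are learned, the error at reward time vanishes.

```python
import numpy as np

def td0_chain(n_states=3, gamma=0.9, alpha=0.1, episodes=500):
    """Tabular TD(0) on a deterministic chain s0 -> s1 -> ... -> terminal,
    with reward 1 on the final transition (hypothetical toy task)."""
    V = np.zeros(n_states + 1)   # index n_states is the terminal state; its value stays 0
    deltas_per_episode = []
    for _ in range(episodes):
        deltas = []
        for s in range(n_states):
            s_next = s + 1
            r = 1.0 if s_next == n_states else 0.0
            delta = r + gamma * V[s_next] - V[s]   # reward prediction error
            V[s] += alpha * delta
            deltas.append(delta)
        deltas_per_episode.append(deltas)
    return V[:n_states], deltas_per_episode

V, deltas = td0_chain()
print("values:", np.round(V, 3))                     # close to [0.81, 0.9, 1.0]
print("RPEs in first episode:", deltas[0])
print("RPEs in last episode:", np.round(deltas[-1], 4))
```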

Furthermore, state representations are often oversimplified in simulations. But experiments by Engelhard et al. (2019) convincingly argue that dopaminergic neurons’ responses vary significantly with task variables during the cue period. Only at reward time can the classical RPE dynamics be observed. Since TD learning is only interested in estimating the mean discounted return of being in a state (given a policy), these differences between individual neurons are washed away.

So $\delta_t$ is an aggregate scalar composed of many different neuron-specific components.

The resulting model is still the same but allows us to study different value channels. Nathaniel showed how to translate such ideas to the Atari domain and highlighted some benefits of studying neuron-specific RPEs (e.g. for exploration & uncertainty quantification).
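Why is “the model still the same”? TD(0) is linear in the reward, so if the reward is split into channel-specific components and each channel runs its own TD update, the channel values always sum to the scalar value, and the channel RPEs sum to the scalar RPE. The sketch below checks this on a random state sequence; the reward decomposition `R` and all sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_states, n_channels = 5, 3
gamma, alpha = 0.9, 0.1

# Hypothetical reward decomposed into channel-specific components
R = rng.random((n_states, n_channels))
states = rng.integers(0, n_states, size=2000)   # a fixed random state sequence

V_channels = np.zeros((n_states, n_channels))   # one value estimate per channel
V_scalar = np.zeros(n_states)                   # classic scalar value

for s, s_next in zip(states[:-1], states[1:]):
    delta_vec = R[s] + gamma * V_channels[s_next] - V_channels[s]   # per-channel RPEs
    V_channels[s] += alpha * delta_vec
    delta = R[s].sum() + gamma * V_scalar[s_next] - V_scalar[s]     # aggregate scalar RPE
    V_scalar[s] += alpha * delta

# Because TD(0) is linear in the reward, the channel values sum to the scalar value
print(np.allclose(V_channels.sum(axis=1), V_scalar))
```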

Note: This work is closely related to recent results by Will Dabney, Matt Botvinick, Zeb Kurth-Nelson & Naoshige Uchida, who merge a distributional RL perspective with the observed dopaminergic heterogeneity. Furthermore, they show how this allows dopaminergic neurons to express different levels of optimism.
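The “different levels of optimism” idea can be sketched with asymmetric learning rates: a unit that scales positive prediction errors by $\alpha^+$ and negative ones by $\alpha^-$ converges to the $\tau$-expectile of the reward distribution, with $\tau = \alpha^+ / (\alpha^+ + \alpha^-)$. Balanced units learn the mean, optimistic units learn values above it. This is a minimal illustration in that spirit, not the authors' model; the reward distribution and learning rates are hypothetical.

```python
def learn_expectile(rewards, alpha_pos, alpha_neg, sweeps=400):
    """One 'dopamine neuron': a value estimate updated with asymmetric learning
    rates for positive vs. negative prediction errors."""
    v = 0.0
    for _ in range(sweeps):
        for r in rewards:
            delta = r - v
            v += (alpha_pos if delta > 0 else alpha_neg) * delta
    return v

# Hypothetical reward distribution: 0 with prob. 0.75, 10 with prob. 0.25 (mean 2.5)
rewards = [0.0, 0.0, 0.0, 10.0]
base_lr = 0.02
values = {}
for tau in (0.1, 0.5, 0.9):   # tau = alpha_pos / (alpha_pos + alpha_neg) = "optimism"
    values[tau] = learn_expectile(rewards, base_lr * tau, base_lr * (1 - tau))
    print(f"optimism tau={tau}: value={values[tau]:.2f}")
```

Taken together, a population of such units with different $\tau$ encodes the whole distribution of rewards rather than only its mean.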

Memo to Myself: Concluding with a few Take-Aways

I definitely made a few mistakes leading up to and during the conference. So let me share some of them, so that I won’t repeat them & you don’t either:

- Take care of yourself: It is really important to get enough sleep. Even the smartest fellow will lose concentration with only 5 hours of sleep. Therefore, take an evening off. Spend some time on your own & don’t be afraid of missing out. You almost always will! There is just too much going on. Also: stay hydrated!

- Try to be critical of other people’s work: A little while ago I basically loved all of science. I accepted all claims and was fascinated by all approaches. But the more work I see & do myself, the more critical I have become, especially when claims are based on noisy neuroimaging data. My current solution is to be Bayesian about the presented science and to trade off expectations & uncertainty.

- Talk, Connect & Share: The science that you get to experience at a conference is all good. There was immense value in seeing what people are currently working on & what is trendy. But talking to junior and senior researchers, mingling and reconnecting with old friends was by far the best part of CCN. People make science beautiful. So embrace it by trying to make their science better (as far as you can)!

My favorite part of the whole conference was by far the mind matching and getting to know so many awesome people. A big thank you to all the organizers as well as volunteers, speakers and participants. On that note, see you next year in San Francisco!

Love, Rob

P.S.: Here are some handwritten notes you can check out:

- Day 0: Including the tutorial and talk by Tim Behrens, Kim Stachenfeld & Liz Spelke.

- Day 1: Including talks by Tim Behrens, Roshan Cools and Anne Churchland.

- Day 2: Including talks by Nando de Freitas, Bernhard Schölkopf & the FEP Panel.

- Day 3: Including talks by Máté Lengyel & Nathaniel Daw.

References

- Kriegeskorte, N., and P. K. Douglas. (2018): “Cognitive computational neuroscience,” Nature Neuroscience, 21, 1148–60.

- Sutton, R. S. (1991): “Dyna, an integrated architecture for learning, planning, and reacting,” ACM SIGART Bulletin, 2, 160–63.

- Deisenroth, M., and C. E. Rasmussen. (2011): “PILCO: A Model-Based and Data-Efficient Approach to Policy Search,” Proceedings of the 28th International Conference on Machine Learning (ICML-11).

- Stachenfeld, K. (2018): “Learning Neural Representations That Support Efficient Reinforcement Learning,” PhD thesis, Princeton University.

- Mattar, M. G., and N. D. Daw. (2018): “Prioritized memory access explains planning and hippocampal replay,” Nature Neuroscience, 21, 1609.

- Whittington, J. C. R., T. H. Muller, S. Mark, C. Barry, N. Burgess, and T. E. J. Behrens. (2019): “The Tolman-Eichenbaum Machine: Unifying space and relational memory through generalisation in the hippocampal formation,” bioRxiv, 770495.

- Behrens, T. E. J., T. H. Muller, J. C. R. Whittington, S. Mark, A. B. Baram, K. L. Stachenfeld, and Z. Kurth-Nelson. (2018): “What is a cognitive map? Organizing knowledge for flexible behavior,” Neuron, 100, 490–509.

- Engelhard, B., J. Finkelstein, J. Cox, W. Fleming, H. J. Jang, S. Ornelas, S. A. Koay, et al. (2019): “Specialized coding of sensory, motor and cognitive variables in VTA dopamine neurons,” Nature.

- Schultz, W., P. Dayan, and P. R. Montague. (1997): “A neural substrate of prediction and reward,” Science, 275, 1593–99.