My Top 10 Deep RL Papers of 2019


2019 - What a year for Deep Reinforcement Learning (DRL) research - but also my first year as a PhD student in the field. Like every PhD novice I got to spend a lot of time reading papers, implementing cute ideas & getting a feeling for the big questions. In this blog post I want to share some of my highlights from the 2019 literature.

In order to give this post a little more structure, I decided to group the papers into 5 main categories and selected a winner as well as a runner-up. Without further ado, here are my top 10 DRL papers from 2019.

Disclaimer: I did not read every DRL paper from 2019 (which would be quite the challenge). Instead, I tried to distill some key narratives as well as stories that excite me. So this is my personal top 10; let me know if I missed your favorite paper!


Category I: Large-Scale Projects

Most of the pre-2019 breakthroughs of Deep RL (e.g., ATARI DQNs, AlphaGo/Zero) were achieved in domains with limited action spaces, fully observable state spaces and moderate credit-assignment time-scales. Partial observability, long time-scales and vast action spaces remained elusive. 2019, on the other hand, proved that we are far from having reached the limits of combining function approximation with reward-based target optimization. Challenges such as Quake III/‘Capture the Flag’, StarCraft II, Dota 2 and robotic hand manipulation are only a subset of the exciting new domains that modern DRL is capable of tackling. I tried to choose the winners of the first category based on their scientific contributions and not only on the massive scaling of already existing algorithms. Everyone with enough compute power can run PPO with crazy batch sizes.

| Title | Authors | Affiliation | Key Contributions |
|---|---|---|---|
| Grandmaster level in StarCraft II using multi-agent reinforcement learning | Vinyals et al. | DeepMind | Scatter Connections + League/PFSP |
| Solving Rubik’s Cube with a Robot Hand | OpenAI | OpenAI | Automatic Domain Randomization |

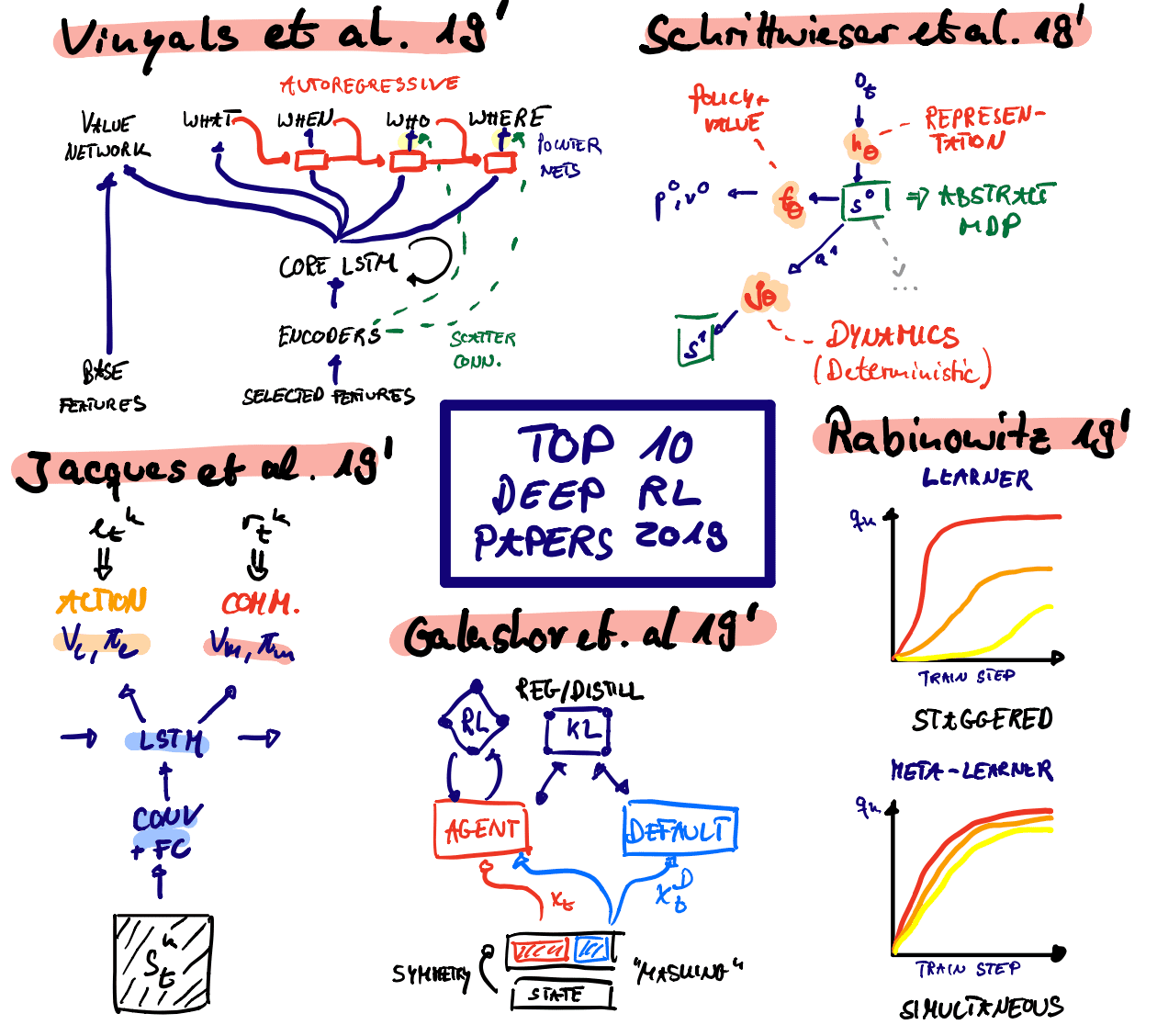

DeepMind’s AlphaStar (Vinyals et al., 2019)

“And the first place in the category ‘Large-Scale DRL Projects’ goes to…” (insert awkward opening of an envelope with a microphone in one hand): DeepMind’s AlphaStar project led by Oriol Vinyals. While reading the Nature paper, I realized that the project is very much based on the FTW setup used to tackle Quake III: combine a distributed IMPALA actor-learner setting with a powerful prior that induces structured exploration.

While FTW uses a prior based on a time-scale hierarchy of two LSTMs, AlphaStar makes use of human demonstrations. The expert demonstrations are used to pre-train the agent’s policy via supervised minimization of a KL objective. They also provide an efficient regularization that keeps the agent’s exploration behavior from being drowned by StarCraft’s curse of dimensionality. But this is definitely not all there is. The scientific contributions include:

- a unique version of prioritized fictitious self-play (aka The League);
- an autoregressive decomposition of the policy with pointer networks;
- the upgoing policy update (UPGO, an evolution of the V-trace off-policy importance-sampling correction for structured action spaces);
- scatter connections (a special form of embedding that maintains the spatial coherence of the entities in the map layer).

Personally, I really enjoyed how much DeepMind, and especially Oriol Vinyals, cared for the StarCraft community. Oftentimes science fiction biases our perception towards thinking that ML is an arms race. But it is human-made & meant to increase our quality of life.
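To make the League’s prioritized fictitious self-play (PFSP) a bit more concrete, here is a minimal NumPy sketch of its opponent-sampling rule. As I read the Nature paper, an opponent B is drawn with probability proportional to f(P[learner beats B]). Two weightings are used: f_hard(x) = (1 - x)^p, which focuses on the hardest opponents, and f_var(x) = x(1 - x), which focuses on evenly matched ones. The function names, the exponent p = 2, the uniform fallback and the win-rate numbers are my own illustrative assumptions. This is not AlphaStar’s implementation, and it assumes the win rates have already been estimated.

```python
import numpy as np

def f_hard(win_rate, p=2.0):
    """Up-weight opponents the learner rarely beats (p = 2 is an assumed value)."""
    return (1.0 - win_rate) ** p

def f_var(win_rate):
    """Up-weight opponents against which the outcome is most uncertain."""
    return win_rate * (1.0 - win_rate)

def pfsp_probabilities(win_rates, weighting=f_hard):
    """Map estimated P[learner beats opponent] to opponent sampling probabilities."""
    weights = weighting(np.asarray(win_rates, dtype=float))
    total = weights.sum()
    if total == 0.0:
        # Degenerate case (e.g. the learner beats everyone): fall back to uniform.
        return np.full(len(weights), 1.0 / len(weights))
    return weights / total

def sample_opponent(win_rates, rng, weighting=f_hard):
    """Draw the index of the next League opponent."""
    probs = pfsp_probabilities(win_rates, weighting)
    return int(rng.choice(len(probs), p=probs))

# Hypothetical win-rate estimates against three past League members.
win_rates = [0.9, 0.5, 0.2]
print(pfsp_probabilities(win_rates))         # most mass on the 0.2 opponent
print(pfsp_probabilities(win_rates, f_var))  # most mass on the 0.5 opponent
print(sample_opponent(win_rates, np.random.default_rng(0)))
```

Swapping the weighting function is all it takes to move between "exploit my weaknesses" and "play evenly matched games".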

OpenAI’s ‘Solving’ of the Rubik’s Cube (OpenAI, 2019)

It has been well known that Deep Learning is equipped to solve tasks which require the extraction & manipulation of high-level features. Low-level dexterity, on the other hand, a capability so natural to us, poses a major challenge for current systems. Or so we thought.

My favorite contribution of OpenAI’s dexterity efforts is Automatic Domain Randomization (ADR). A key challenge for training Deep RL agents on robotic tasks is to transfer what was learned in simulation to the physical robot. Simulators only capture a limited set of real-world mechanisms, & accurately simulating friction demands computation time. That time is costly & could otherwise be used to generate more (but noisier) transitions in the environment. Domain Randomization has been proposed to obtain a robust policy: instead of training the agent on a single environment with a single set of environment-generating hyperparameters, the agent is trained on a plethora of different configurations. ADR aims to design a curriculum of environment complexities that maximizes learning progress. By automatically increasing/decreasing the range of possible environment configurations based on the agent’s learning progress, ADR provides a pseudo-natural curriculum for the agent. Astonishingly, this (together with a PPO-LSTM-GAE-based policy) induces a form of meta-learning that appears not to have reached its full capabilities at the time of publishing.
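The boundary-expansion logic behind ADR can be sketched in a few lines. The rules are as follows:

- Each randomized parameter has a range [low, high].
- Occasionally, one parameter is pinned to one of its boundaries and the agent’s performance there is recorded.
- Once enough scores have accumulated, the boundary is pushed outward if the average is above an upper threshold and pulled inward if it is below a lower one.

This is a simplified single-process reading of the paper’s description, not OpenAI’s code. The class and function names, the buffer size, the thresholds, the step size and `toy_evaluate` (a stand-in for running an episode) are all hypothetical.

```python
import numpy as np

class ADRParameter:
    """One randomized environment parameter whose sampling range [low, high] adapts."""

    def __init__(self, value, step, buffer_size=10, t_low=0.2, t_high=0.8):
        self.low = self.high = float(value)  # start from a single nominal value
        self.step = step                     # how far a boundary moves per update
        self.buffer_size = buffer_size
        self.t_low, self.t_high = t_low, t_high
        self.buffers = {"low": [], "high": []}

    def sample(self, rng):
        return rng.uniform(self.low, self.high)

    def record(self, boundary, performance):
        """Store performance measured with this parameter pinned to one boundary."""
        buf = self.buffers[boundary]
        buf.append(performance)
        if len(buf) < self.buffer_size:
            return
        mean = float(np.mean(buf))
        buf.clear()
        # +1 widens the range (agent copes), -1 narrows it (agent struggles), 0 keeps it.
        direction = 1.0 if mean >= self.t_high else (-1.0 if mean <= self.t_low else 0.0)
        if boundary == "high":
            self.high = max(self.low, self.high + direction * self.step)
        else:
            self.low = min(self.high, self.low - direction * self.step)

def adr_step(params, rng, evaluate, boundary_prob=0.5):
    """One ADR iteration. `evaluate(config) -> score in [0, 1]` is a hypothetical episode runner."""
    config = {name: p.sample(rng) for name, p in params.items()}
    if rng.random() < boundary_prob:
        names = list(params)
        name = names[rng.integers(len(names))]
        boundary = "low" if rng.random() < 0.5 else "high"
        config[name] = getattr(params[name], boundary)  # pin this parameter to a boundary
        params[name].record(boundary, evaluate(config))
    else:
        evaluate(config)  # regular training episode on a randomized environment

def toy_evaluate(config):
    """Hypothetical stand-in: the agent succeeds while friction stays within 0.5 of 1.0."""
    return 1.0 if abs(config["friction"] - 1.0) < 0.5 else 0.0

rng = np.random.default_rng(0)
params = {"friction": ADRParameter(value=1.0, step=0.05)}
for _ in range(5000):
    adr_step(params, rng, toy_evaluate)
print(params["friction"].low, params["friction"].high)  # range has grown around 1.0
```

In this toy run, the range grows until it reaches the edge of what the (fixed) agent can handle and then hovers there. In real training, the agent keeps improving, so the boundaries keep moving outward.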

There has been a lot of Twitter talk about the word ‘solve’. The algorithm did not ‘fully’ learn end-to-end what the right sequence of moves is to solve a cube & then do the dexterous manipulation required. But honestly, what is more impressive: In-hand manipulation with crazy reward sparsity or learning a fairly short sequence of symbolic transformations? Woj Zaremba mentioned at the ‘Learning Transferable Skills’ workshop at NeurIPS 2019 that it took them one day to ‘solve the cube’ with DRL & that it is possible to do the whole charade fully end-to-end. That is impressive.


Category II: Model-Based RL

While the previous two projects are exciting showcases of the potential of DRL, they are ridiculously sample-inefficient. I don’t want to know the electricity bill OpenAI & DeepMind have to pay. Good thing that there are people working on increasing the sample (but not necessarily computational) efficiency via hallucinating in a latent space. Traditionally, Model-Based RL has struggled with learning the dynamics of high-dimensional state spaces. Usually a lot of the model capacity had to be “wasted” on parts of the state space (e.g., the outermost pixels of an ATARI frame) that are rarely relevant to success. More recently, there have been multiple proposals to do planning/imagination in an abstract space (i.e., an Abstract MDP). And these are my two favorite approaches:

| Title | Authors | Affiliation | Key Contributions |
|---|---|---|---|
| Mastering ATARI, Go, Chess and Shogi by planning with a learned model | Schrittwieser et al. | DeepMind | MuZero |
| Dream to Control: Learning Behaviors by Latent Imagination | Hafner et al. | Google Brain | Dreamer |

MuZero (Schrittwieser et al., 2019)

MuZero provides the next iteration in removing constraints from the AlphaGo/AlphaZero project. Specifically, it removes the need to know the transition dynamics, i.e., to have access to a perfect simulator of the environment. Thereby, the general MCTS + function approximation toolbox is opened up to more general problem settings such as vision-based problems (e.g., ATARI).

The problem is reduced to regressing rewards, values & policies while learning three functions:

- a representation function $h_\theta$ that maps an observation to an abstract space;
- a dynamics function $g_\theta$;
- a policy and value predictor $f_\theta$.

Planning can then be done by unrolling the deterministic dynamics model in the latent space, starting from the embedded observation. As before, the next action is selected based on the MCTS rollout by sampling proportionally to the visit counts. The entire architecture is trained end-to-end using BPTT & outperforms AlphaGo as well as ATARI baselines in the low-sample regime. Interestingly, being able to model rewards, values & policies appears to be all that is needed to plan effectively. The authors state that planning in latent space also opens up the application of MCTS to environments with stochastic transitions, which is pretty exciting if you ask me.
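To show how the three functions fit together, here is a toy NumPy sketch of a latent unroll and of visit-count-based action selection. Randomly initialized linear maps stand in for the learned networks. There is no MCTS and no training loss, and all sizes and names are illustrative assumptions. The point is only the data flow: $h$ embeds the observation once, $g$ is applied repeatedly in latent space, and $f$ reads off a policy and value at every unrolled step.

```python
import numpy as np

rng = np.random.default_rng(0)
OBS_DIM, LATENT_DIM, NUM_ACTIONS = 8, 4, 3  # arbitrary toy sizes

# Random linear maps stand in for the three learned networks (no training here).
W_h = rng.normal(size=(LATENT_DIM, OBS_DIM))
W_g = rng.normal(size=(LATENT_DIM, LATENT_DIM + NUM_ACTIONS))
w_r = rng.normal(size=LATENT_DIM + NUM_ACTIONS)
W_p = rng.normal(size=(NUM_ACTIONS, LATENT_DIM))
w_v = rng.normal(size=LATENT_DIM)

def h(obs):
    """Representation: observation -> latent state."""
    return np.tanh(W_h @ obs)

def g(state, action):
    """Dynamics: (latent state, action) -> (reward, next latent state)."""
    x = np.concatenate([state, np.eye(NUM_ACTIONS)[action]])
    return float(w_r @ x), np.tanh(W_g @ x)

def f(state):
    """Prediction: latent state -> (policy, value)."""
    logits = W_p @ state
    policy = np.exp(logits - logits.max())
    return policy / policy.sum(), float(w_v @ state)

def unroll(obs, actions):
    """Unroll the model in latent space; the real environment is never queried."""
    state = h(obs)
    policy, value = f(state)
    policies, values, rewards = [policy], [value], []
    for a in actions:
        reward, state = g(state, a)
        policy, value = f(state)
        rewards.append(reward)
        policies.append(policy)
        values.append(value)
    return policies, values, rewards

def select_action(visit_counts, rng, temperature=1.0):
    """Sample an action with probability proportional to N(a)^(1/T)."""
    counts = np.asarray(visit_counts, dtype=float) ** (1.0 / temperature)
    return int(rng.choice(len(counts), p=counts / counts.sum()))

policies, values, rewards = unroll(rng.normal(size=OBS_DIM), actions=[0, 2, 1])
print(len(policies), len(rewards))  # K + 1 predictions, K rewards
```

During training, each of the K + 1 predicted policies/values and the K rewards would be regressed onto targets from real trajectories. That loss is what ties the latent space to the task.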

Dreamer (aka. PlaNet 2.0; Hafner et al., 2019)

Dreamer, on the other hand, provides a principled extension to continuous action spaces that is able to tame long-horizon tasks based on high-dimensional visual inputs. The representation learning problem is decomposed into iteratively learning a representation, a transition and a reward model. The overall optimization process is interleaved with training an actor-critic-based policy on imagined trajectories. Dreamer learns by propagating “analytical” gradients of learned state values back through imagined trajectories of the world model. More specifically, stochastic gradients of multi-step returns are efficiently propagated through neural network predictions using the re-parametrization trick. The approach is evaluated on the DeepMind Control Suite and is able to control behavior based on $64 \times 64 \times 3$-dimensional visual input. Finally, the authors also compare different representation learning methods (reward prediction, pixel reconstruction & contrastive estimation) and show that pixel reconstruction usually outperforms contrastive estimation.
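The multi-step returns that Dreamer optimizes are λ-returns along the imagined trajectory: an exponentially weighted mix of n-step returns that bootstraps from the critic. Below is a sketch of the standard backward recursion. It assumes `rewards[t]` is the predicted reward at imagined step t and `next_values[t]` is the critic’s value of the following imagined state. The defaults γ = 0.99 and λ = 0.95 are illustrative. NumPy only shows the arithmetic; in Dreamer the same computation lives inside a differentiable graph, so gradients can flow back through the world model.

```python
import numpy as np

def lambda_returns(rewards, next_values, gamma=0.99, lam=0.95):
    """
    Backward recursion for lambda-returns over an imagined horizon of length H:
        G_{H-1} = r_{H-1} + gamma * v_H
        G_t     = r_t + gamma * ((1 - lam) * v_{t+1} + lam * G_{t+1})
    next_values[t] holds v_{t+1}, the value of the state reached after step t.
    """
    rewards = np.asarray(rewards, dtype=float)
    next_values = np.asarray(next_values, dtype=float)
    returns = np.zeros_like(rewards)
    last = next_values[-1]  # bootstrap from the value of the final imagined state
    for t in reversed(range(len(rewards))):
        last = rewards[t] + gamma * ((1.0 - lam) * next_values[t] + lam * last)
        returns[t] = last
    return returns

# lam = 1 gives Monte-Carlo-style returns bootstrapped at the horizon,
# lam = 0 gives one-step TD targets.
print(lambda_returns([1, 1, 1], [0, 0, 5], gamma=1.0, lam=1.0))
print(lambda_returns([1, 1, 1], [0, 0, 5], gamma=1.0, lam=0.0))
```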

Category III: Multi-Agent RL

Agency goes beyond the simplistic paradigm of central control. Our day-to-day life is filled with situations that require anticipation & Theory of Mind. We constantly predict the reactions of other individuals and readjust our beliefs based on recent evidence. Naive independent optimization via gradient descent is prone to getting stuck in local optima. This already becomes apparent in the simplistic two-agent ‘society’ of GAN training. Joint learning induces a form of non-stationarity in the environment, which is the core challenge of Multi-Agent RL (MARL). The two selected MARL papers highlight two central points: going from the classical centralized-training + decentralized-control paradigm towards social reward shaping, & the scaled use and unexpected results of self-play:

| Title | Authors | Affiliation | Key Contributions |
|---|---|---|---|
| Social Influence as Intrinsic Motivation for Multi-Agent Deep Reinforcement Learning | Jaques et al. | MIT | Reward Shaping for Decentralized Training |
| Emergent tool use from multi-agent autocurricula | Baker et al. | OpenAI | Environment Curriculum Learning for Multi-Agent Setups |

Social Influence as Intrinsic Motivation (Jaques et al., 2019)

While traditional approaches to intrinsic motivation have often been ad hoc and manually defined, this paper introduces a causal notion of social empowerment via pseudo-rewards resulting from influential behavior. The key idea is to reward actions that lead to a relatively large change in other agents’ behavior.

The concept of influence is thereby grounded in a counterfactual assessment: How would another agent’s action change if I had acted differently in this situation? The KL divergence between the other agent’s policy conditioned on my actual action and its marginal policy (with my action counterfactually marginalized out) can then be seen as a measure of social influence. The authors test the proposed intrinsic motivation formulation in a set of sequential social dilemmas and provide evidence for enhanced emergent coordination. Furthermore, when allowing for vector-valued communication, social influence reward shaping results in informative & sparse communication protocols. Finally, they get rid of centralized access to other agents’ policies by having agents learn to predict each other’s behavior, a soft version of Theory of Mind.
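For a single pair of agents with discrete actions, the influence measure described above fits in a few lines. In this sketch, `cond_policy[a_k, a_j]` is agent j’s action distribution given that agent k takes `a_k`. In the paper’s later variant this comes from a learned model of the other agent, but here it is simply given. Summing this quantity over all other agents j would give agent k’s influence reward. The function name and the small `eps` for numerical safety are my own choices.

```python
import numpy as np

def social_influence(cond_policy, my_policy, my_action, eps=1e-12):
    """
    KL( p(a_j | a_k = my_action, s) || sum_a p(a_j | a_k = a, s) p(a_k = a | s) )

    cond_policy[a_k, a_j]: other agent j's action distribution given my action a_k.
    my_policy[a_k]:        my own action distribution in this state.
    my_action:             the action I actually took.
    """
    cond_policy = np.asarray(cond_policy, dtype=float)
    my_policy = np.asarray(my_policy, dtype=float)
    marginal = my_policy @ cond_policy  # counterfactual marginal: average over my actions
    actual = cond_policy[my_action]     # j's distribution given what I really did
    return float(np.sum(actual * (np.log(actual + eps) - np.log(marginal + eps))))

# Agent j ignores me -> zero influence; agent j copies me -> influence log(2).
print(social_influence([[0.3, 0.7], [0.3, 0.7]], [0.5, 0.5], my_action=0))
print(social_influence([[1.0, 0.0], [0.0, 1.0]], [0.5, 0.5], my_action=0))
```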

Autocurricula & Emergent Tool-Use (OpenAI, 2019)

Strictly speaking this work by OpenAI may not be considered a pure MARL paper. Instead of learning a set of decentralized controllers, there is a central A3C-PPO-LSTM-GAE-based controller. Nonetheless, the training is performed using multi-agent self-play and the most simplistic reward one can imagine: Survival in a multi-agent game of hide-and-seek. The authors show how such a simplistic reward structure paired with self-play can lead to self-supervised skill acquisition that is more efficient than intrinsic motivation. In the words of the authors:

“When a new successful strategy or mutation emerges, it changes the implicit task distribution neighboring agents need to solve and creates a new pressure for adaptation.”

This emergence of an autocurriculum and of distinct plateaus of dominant strategies ultimately led to unexpected solutions (such as surfing on objects). The agents undergo 6 distinct phases of dominant strategies, where shifts are driven by the interaction with tools in the environment. The hiders learn a division of labor due to team-based rewards. Finally, a few interesting observations regarding large-scale implementation:

- Large batch sizes are very important when training a centralized controller in MARL. They not only significantly stabilize learning but also allow for larger learning rates & bigger epochs.

- Conditioning the critic on the state observations of all agents provides more robust feedback signals to the actors. This observation was already made in the MADDPG paper by Lowe et al. (2017).
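The team-based hide-and-seek reward mentioned above is simple enough to write down. As I understand the Baker et al. (2019) setup:

- Hiders receive +1 when every hider is hidden and -1 as soon as any hider is seen.
- Seekers receive the negation.
- No reward is given during an initial preparation phase.

I am treating the preparation-phase detail as an assumption. The paper’s extra penalty for leaving the play area is omitted. Because every team member receives the same scalar, individual hiders can profit from specializing, which is what makes a division of labor pay off.

```python
import numpy as np

def hide_and_seek_rewards(hider_seen, num_seekers, in_preparation_phase):
    """
    Team-based reward for one timestep (simplified reading of Baker et al., 2019).
    hider_seen: one boolean per hider, True if some seeker currently sees that hider.
    Returns (per-hider rewards, per-seeker rewards).
    """
    num_hiders = len(hider_seen)
    if in_preparation_phase:
        # Assumption: no reward while seekers are frozen at the start of an episode.
        return np.zeros(num_hiders), np.zeros(num_seekers)
    hider_reward = -1.0 if any(hider_seen) else 1.0
    # Every member of a team gets the same reward; the game is zero-sum between teams.
    return np.full(num_hiders, hider_reward), np.full(num_seekers, -hider_reward)

print(hide_and_seek_rewards([False, True], num_seekers=2, in_preparation_phase=False))
```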

Category IV: Learning Dynamics

Learning dynamics in Deep RL remain far from being understood. Unlike supervised learning, where the training data is somewhat given and treated as IID (independent and identically distributed), RL requires an agent to generate its own training data. This can lead to significant instabilities (e.g., the Deadly Triad), something anyone who has toyed around with DQNs will have experienced. Still, there have been some major theoretical breakthroughs revolving around new discoveries (such as Neural Tangent Kernels). The two winners of the dynamics category highlight essential characteristics of memory-based meta-learning (more general than just RL) as well as of on-policy RL:

| Title | Authors | Affiliation | Key Contributions |
|---|---|---|---|
| Meta-learners’ learning dynamics are unlike learners’ | Rabinowitz | DeepMind | Empirical characterization of Meta-Learner’s inner loop dynamics |
| Ray Interference: a Source of Plateaus in Deep Reinforcement Learning | Schaul et al. | DeepMind | Analytical derivation of plateau phenomenon in on-policy RL |

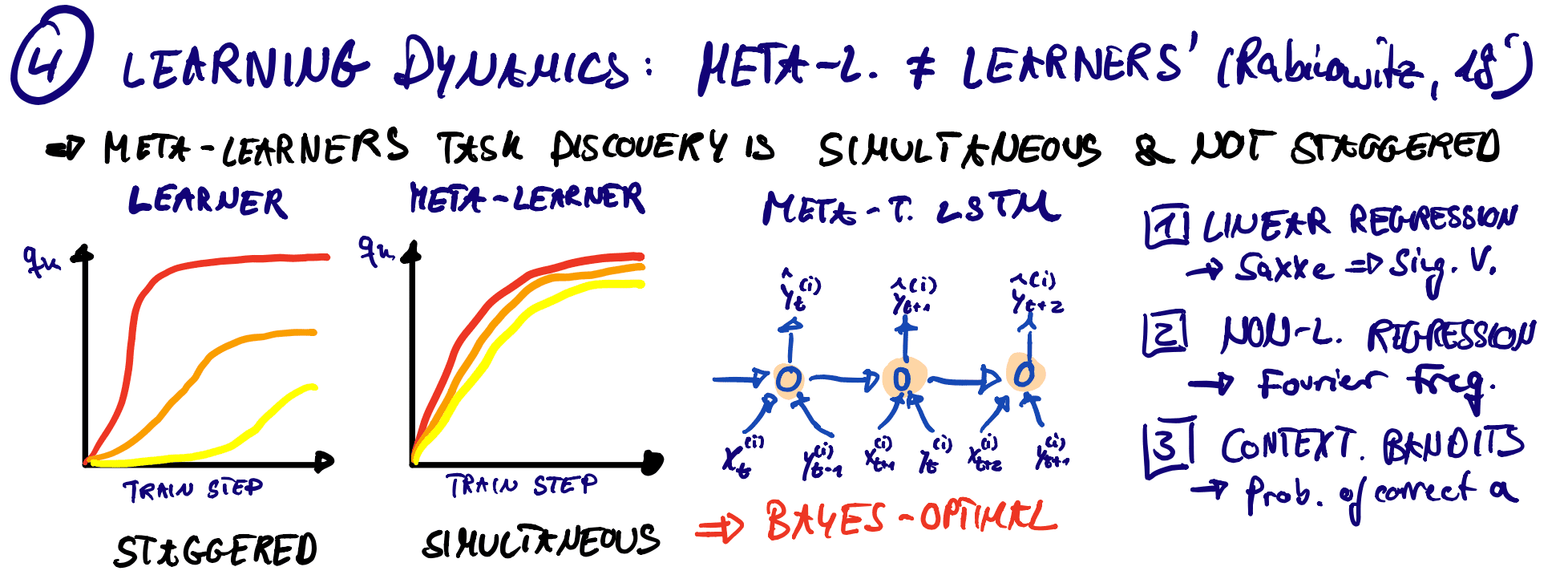

Non-Staggered Meta-Learner’s Dynamics (Rabinowitz, 2019)

Recently, there have been several advances in understanding the learning dynamics of Deep Learning & Stochastic Gradient Descent. These include the findings on staggered task discovery (e.g., Saxe et al., 2013; Rahaman et al., 2018). Understanding the dynamics of Meta-Learning (e.g., Wang et al., 2016) & the relationship between outer- and inner-loop learning, on the other hand, remains elusive. This paper attempts to address this question.
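A compact way to see what "staggered" means is the closed-form solution of Saxe et al. (2013) for a two-layer linear network. Under their assumptions (small, balanced, decoupled initialization), the strength $u$ of each input-output mode with singular value $s$ follows $\tau \dot u = 2u(s - u)$. That gives a sigmoidal trajectory whose midpoint arrives later for weaker modes. The sketch below evaluates this solution. The initial strength `u0` and the time constant `tau` are arbitrary illustrative values.

```python
import numpy as np

def mode_strength(t, s, u0=1e-3, tau=1.0):
    """
    Closed-form solution of tau * du/dt = 2 u (s - u) with u(0) = u0:
        u(t) = s * e^{2st/tau} / (e^{2st/tau} - 1 + s/u0)
    """
    e = np.exp(2.0 * s * np.asarray(t, dtype=float) / tau)
    return s * e / (e - 1.0 + s / u0)

def half_time(s, u0=1e-3, tau=1.0):
    """Time at which a mode reaches half of its final strength s."""
    return tau / (2.0 * s) * np.log(s / u0 - 1.0)

# Stronger modes (larger s) are picked up earlier: learning is staggered.
for s in (3.0, 2.0, 1.0):
    print(f"s={s}: half-way at t={half_time(s):.2f}")
```

Rabinowitz’s observation is that a meta-learner’s inner loop does not show this ordering. It picks up all modes of a task at once.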

The author empirically establishes that the meta-learner’s inner loop undergoes very different dynamics from those of a standard learner. Instead of sequentially discovering task structures, the meta-learner learns about the entire task simultaneously. This is reminiscent of Bayes-optimal inference & provides evidence for a connection between meta-learning & Empirical Bayes. The outer learning loop thereby corresponds to learning an optimal prior for rapid adaptation during the inner loop. These findings matter whenever the actual learning behavior of a system is important (e.g., curriculum learning, safe exploration as well as human-in-the-loop applications). Finally, they might help us design learning signals that allow for fast adaptation.

Ray Interference (Schaul et al., 2019)

Ray interference is a phenomenon observed in (multi-objective) Deep RL when learning dynamics travel through a sequence of plateaus. These step transitions in the learning curve are associated with a staggered discovery (& unlearning!) of skills. This path is caused by a coupling of learning and data generation that arises from on-policy rollouts, hence an interference. It constrains the agent to learn one thing at a time, while parallel learning of individual contexts would be beneficial. The authors derive an analytical relationship to dynamical systems and show a connection to saddle point transitions. The empirical validation is performed on contextual bandits. I would love to know how severe the interference problem is in classical on-policy continuous control tasks. I am also especially excited about how this might relate to evolutionary methods such as Population-Based Training (PBT). The authors state that PBT may shield against such detrimental on-policy effects. Instead of training a single agent, PBT trains a population with different hyperparameters in parallel. Thereby, the ensemble can generate a diverse set of experiences, which may help overcome plateaus.
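The on-policy coupling at the heart of this argument already shows up in a two-armed bandit. With exact on-policy policy gradients, the update to the good arm’s logit is proportional to how often that arm is already chosen, $\pi_i (r_i - J)$. A supervised cross-entropy update towards the good arm has no such factor. Starting from a policy that rarely picks the good arm, the on-policy learner therefore sits on a plateau first.

This toy sketch isolates that single ingredient. It is not the paper’s derivation, which involves several contexts interfering through shared parameters. The initial probability, learning rate and threshold are arbitrary choices.

```python
import numpy as np

REWARDS = np.array([1.0, 0.0])  # arm 0 is the good arm

def softmax(logits):
    z = np.exp(logits - logits.max())
    return z / z.sum()

def on_policy_pg(probs):
    """Exact expected policy gradient of J = sum_a pi_a r_a w.r.t. the logits."""
    return probs * (REWARDS - probs @ REWARDS)

def supervised_ce(probs):
    """Gradient of log pi(arm 0) w.r.t. the logits (supervised target: arm 0)."""
    return np.array([1.0, 0.0]) - probs

def steps_to_threshold(update, init_prob=0.01, lr=0.5, threshold=0.9, max_steps=100_000):
    """Gradient-ascent steps until the good arm is chosen with probability >= threshold."""
    logits = np.log(np.array([init_prob, 1.0 - init_prob]))
    for step in range(max_steps):
        probs = softmax(logits)
        if probs[0] >= threshold:
            return step
        logits = logits + lr * update(probs)
    return max_steps

print("on-policy PG:", steps_to_threshold(on_policy_pg))
print("supervised CE:", steps_to_threshold(supervised_ce))
```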

Category V: Compositionality & Priors

One approach to obtaining effective and fast-adapting agents is to use informed priors. Instead of learning from a non-informative knowledge base, the agent can rely upon previously distilled knowledge in the form of a prior distribution. But how may one obtain such a prior? The following two papers propose two distinct ways: simultaneous learning of a goal-agnostic default policy & learning a dense embedding space that is able to represent a large set of expert behaviors.

| Title | Authors | Affiliation | Key Contributions |
|---|---|---|---|
| Information asymmetry in KL-regularized RL | Galashov et al. | DeepMind | Re-usable Default Policy Learning |
| Neural probabilistic motor primitives for humanoid control | Merel et al. | DeepMind | NPMP |

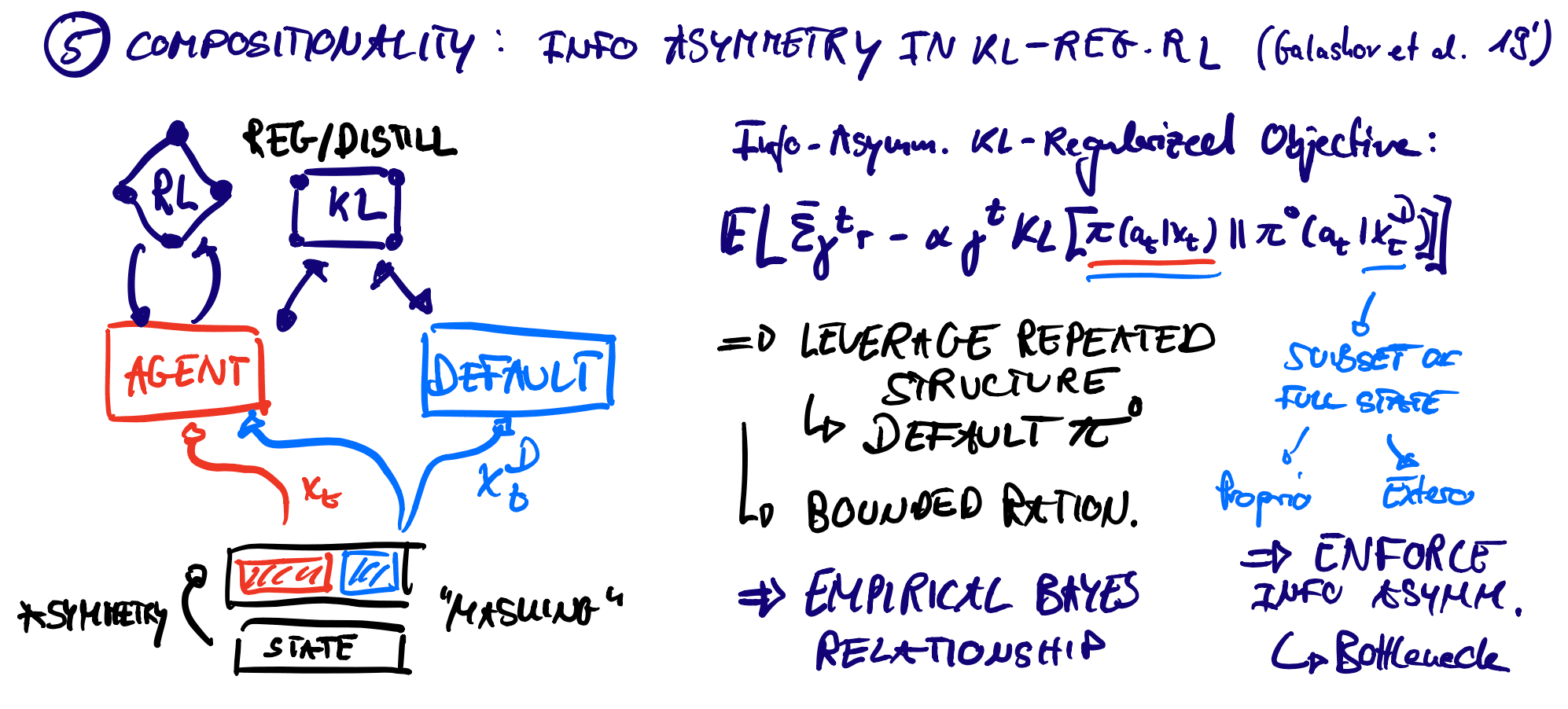

Information Asymmetry in KL-Regularized RL (Galashov et al., 2019)

The authors provide an approach to leverage repeated structure in learning problems. More specifically, the learning of a default policy is enforced by restricting the state information the default policy receives (e.g., giving it only proprioceptive rather than exteroceptive information). The KL-regularized expected reward objective can then be rewritten such that the divergence is computed between the agent’s policy $\pi$ and a default policy $\pi_0$ that receives only partial inputs. Optimisation is then performed by alternating between two kinds of gradient descent updates:

- updates of $\pi$ on the standard KL-regularized objective (regularization);
- updates of $\pi_0$ via supervised learning on trajectories of $\pi$ (distillation).
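For discrete actions, both halves of that alternation can be written as simple quantities. The agent maximizes the discounted sum of $r_t - \alpha\,\mathrm{KL}(\pi(\cdot|x_t)\,\|\,\pi_0(\cdot|x^D_t))$, where $x^D_t$ is the restricted input of the default policy. The default policy, in turn, minimizes the average $\mathrm{KL}(\pi\,\|\,\pi_0)$ on states visited by $\pi$. The sketch below takes both policies’ action distributions as given arrays, so the information asymmetry only appears in the docstrings. The function names, α = 0.1 and γ = 0.99 are illustrative assumptions.

```python
import numpy as np

def kl(p, q, eps=1e-12):
    """KL(p || q) for categorical distributions along the last axis."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    return np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=-1)

def kl_regularized_return(rewards, pi_probs, pi0_probs, alpha=0.1, gamma=0.99):
    """
    Discounted sum of r_t - alpha * KL(pi(.|x_t) || pi0(.|x_t^D)) along one trajectory.
    pi_probs[t]:  agent policy, which sees the full input x_t.
    pi0_probs[t]: default policy, which only sees the restricted input x_t^D.
    """
    regularized = np.asarray(rewards, dtype=float) - alpha * kl(pi_probs, pi0_probs)
    discounts = gamma ** np.arange(len(regularized))
    return float(np.sum(discounts * regularized))

def default_policy_loss(pi_probs, pi0_probs):
    """Distillation loss for pi0: average KL(pi || pi0) over states visited by pi."""
    return float(np.mean(kl(pi_probs, pi0_probs)))

uniform = [[0.5, 0.5], [0.5, 0.5]]
peaked = [[0.9, 0.1], [0.9, 0.1]]
print(kl_regularized_return([1.0, 1.0], peaked, uniform))  # penalized for deviating
print(default_policy_loss(peaked, uniform))                # what pi0 would reduce
```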

Several experiments show that this can lead to reusable behavior in sparse-reward environments. Usually, the large action space of DeepMind Lab is reduced by a human prior (or bias). The authors show that this can be circumvented by learning a default policy that constrains the action space & thereby reduces the complexity of the exploration problem. The paper also draws connections to information bottleneck ideas as well as to learning a generative model with variational EM algorithms.

NPMP: Neural Probabilistic Motor Primitives (Merel et al., 2019)

Few-shot learning has been regarded as the crux of intelligence. It requires vast amounts of generalization & we humans do it all the time. A mechanism that might enable such flexibility is the modular reuse of subroutines. In the motor control literature it has therefore been argued that there is a set of motor primitives/defaults that can be efficiently recomposed & reshaped. In the final paper of today’s post, Merel et al. (2019) cast this intuition in the realm of deep probabilistic models.

The authors introduce an autoencoder architecture with a latent variational bottleneck to distill a large set of expert policies into a latent embedding space. Importantly, the expert policies are not arbitrary pre-trained RL agents, but policies trained to track 2-second snippets of motion capture data. The main ambition is to extract representations that not only encode key dimensions of behavior but are also easily recalled during execution. The model boils down to an autoregressive latent-variable model of state-conditional action sequences. Given the current history and a small look-ahead snippet, the model has to predict the action that enables such a transition (aka an inverse model). The action can thereby be thought of as a bottleneck between a future trajectory and a past latent state.

Given such a powerful ‘motor primitive’ embedding, one still has to obtain the student policy from the expert rollouts. Merel et al. (2019) argue against a pure behavioral cloning perspective, since this often turns out to be either sample-inefficient or non-robust. Instead, they conceptualize the experts as nonlinear feedback controllers around a single nominal trajectory. They then log the Jacobian at every action-state pair and optimize a perturbation objective that resembles a form of denoising autoencoder. Their experiments show that this approach is able to distill 2707 experts & perform effective one-shot transfer, resulting in smooth behaviors.
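The Jacobian trick can be illustrated by how it turns one nominal trajectory into many training pairs. Around each logged state $s^*$ with expert action $a^*$ and Jacobian $J = \partial a / \partial s$, the expert is approximated linearly, $a(s^* + \delta) \approx a^* + J\delta$. The student can then be trained on perturbed states with these corrected targets instead of only on the nominal states. The sketch below generates such pairs and checks them on a hypothetical linear expert, where the approximation is exact. The function name, Gaussian perturbations and the noise scale are my own assumptions rather than the paper’s exact recipe.

```python
import numpy as np

def lfpc_training_pairs(nominal_states, nominal_actions, jacobians, noise_scale, rng):
    """
    Perturbed (state, action) pairs from one expert's nominal trajectory.
    nominal_states:  (T, ds) states along the nominal trajectory
    nominal_actions: (T, da) expert actions at those states
    jacobians:       (T, da, ds) d(action)/d(state) of the expert at each step
    Uses the linear-feedback approximation a(s* + delta) ~= a* + J @ delta.
    """
    deltas = noise_scale * rng.standard_normal(nominal_states.shape)
    states = nominal_states + deltas
    actions = nominal_actions + np.einsum("tij,tj->ti", jacobians, deltas)
    return states, actions

# Hypothetical linear expert a = K s + b, for which the targets are exact.
rng = np.random.default_rng(0)
K, b = rng.normal(size=(2, 3)), rng.normal(size=2)
s_nom = rng.normal(size=(5, 3))
a_nom = s_nom @ K.T + b
J = np.broadcast_to(K, (5, 2, 3))
s_pert, a_pert = lfpc_training_pairs(s_nom, a_nom, J, noise_scale=0.1, rng=rng)
print(np.allclose(a_pert, s_pert @ K.T + b))  # targets match the expert off-trajectory
```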

Conclusions


All in all, 2019 highlighted the immense potential of Deep RL in previously unimagined dimensions. The highlighted large-scale projects remain far from sample-efficient. But these problems are being addressed by the current hunt for effective inductive biases, priors & model-based approaches.

I am excited for what there is to come in 2020 & believe that it is an awesome time to be in the field. There are major problems, but the impact that one can have is proportionately great. There is no better time to live than the present.

References

- Vinyals, O., I. Babuschkin, W. M. Czarnecki, M. Mathieu, A. Dudzik, J. Chung, D. H. Choi, et al. (2019): “Grandmaster level in StarCraft II using multi-agent reinforcement learning,” Nature, 575, 350–354.

- Akkaya, I., M. Andrychowicz, M. Chociej, M. Litwin, B. McGrew, A. Petron, A. Paino, et al. (2019): “Solving Rubik’s Cube with a Robot Hand,” arXiv preprint arXiv:1910.07113.

- Schrittwieser, J., I. Antonoglou, T. Hubert, K. Simonyan, L. Sifre, S. Schmitt, A. Guez, et al. (2019): “Mastering Atari, Go, Chess and Shogi by planning with a learned model,” arXiv preprint arXiv:1911.08265.

- Hafner, D., T. Lillicrap, J. Ba, and M. Norouzi. (2019): “Dream to Control: Learning Behaviors by Latent Imagination,” arXiv preprint arXiv:1912.01603.

- Jaques, N., A. Lazaridou, E. Hughes, C. Gulcehre, P. Ortega, D. Strouse, J. Z. Leibo, and N. De Freitas. (2019): “Social Influence as Intrinsic Motivation for Multi-Agent Deep Reinforcement Learning,” International Conference on Machine Learning.

- Baker, B., I. Kanitscheider, T. Markov, Y. Wu, G. Powell, B. McGrew, and I. Mordatch. (2019): “Emergent tool use from multi-agent autocurricula,” arXiv preprint arXiv:1909.07528.

- Rabinowitz, N. C. (2019): “Meta-learners’ learning dynamics are unlike learners’,” arXiv preprint arXiv:1905.01320.

- Schaul, T., D. Borsa, J. Modayil, and R. Pascanu. (2019): “Ray Interference: a Source of Plateaus in Deep Reinforcement Learning,” arXiv preprint arXiv:1904.11455.

- Galashov, A., S. M. Jayakumar, L. Hasenclever, D. Tirumala, J. Schwarz, G. Desjardins, W. M. Czarnecki, Y. W. Teh, R. Pascanu, and N. Heess. (2019): “Information asymmetry in KL-regularized RL,” arXiv preprint arXiv:1905.01240.

- Merel, J., L. Hasenclever, A. Galashov, A. Ahuja, V. Pham, G. Wayne, Y. W. Teh, and N. Heess. (2018): “Neural probabilistic motor primitives for humanoid control,” arXiv preprint arXiv:1811.11711.

- Lowe, R., Y. Wu, A. Tamar, J. Harb, P. Abbeel, and I. Mordatch. (2017): “Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments,” Advances in Neural Information Processing Systems.

- Saxe, A. M., J. L. McClelland, and S. Ganguli. (2013): “Exact solutions to the nonlinear dynamics of learning in deep linear neural networks,” arXiv preprint arXiv:1312.6120.

- Rahaman, N., A. Baratin, D. Arpit, F. Draxler, M. Lin, F. A. Hamprecht, Y. Bengio, and A. Courville. (2018): “On the spectral bias of neural networks,” arXiv preprint arXiv:1806.08734.

- Wang, J. X., Z. Kurth-Nelson, D. Tirumala, H. Soyer, J. Z. Leibo, R. Munos, C. Blundell, D. Kumaran, and M. Botvinick. (2016): “Learning to reinforcement learn,” arXiv preprint arXiv:1611.05763.