📝 ‘EvoLLM’ & ‘EvoTransformer’ Papers Are Out 🎉

Date:

Stoked to share that two projects I worked on during my time as a student researcher at Google DeepMind in Tokyo 🗼 are now available on arXiv! In both, we explore the capabilities of Transformers for Evolutionary Optimization.

More specifically, our first work, EvoLLM 💬, shows that LLMs trained purely on text can be used as powerful recombination operators for Evolution Strategies. You can find the paper here.

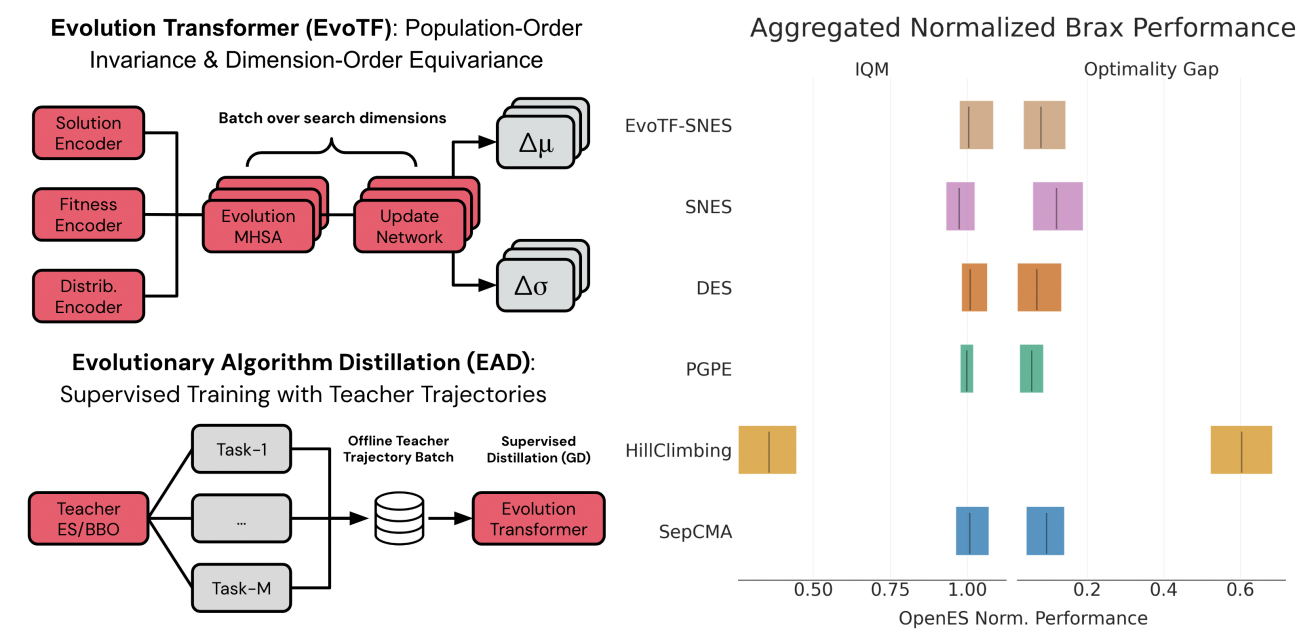
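
If "recombination operator" sounds abstract, here is a minimal sketch of where that step sits in a plain Evolution Strategy loop. This is not the EvoLLM implementation: `run_es`, `mean_recombination`, and `sphere` are hypothetical names made up for illustration, and the recombination used here is the classic elite average. The idea in the paper is that an LLM takes over the role of the `recombine` function, turning evaluated candidates into the next search mean.

```python
import numpy as np


def mean_recombination(elites: np.ndarray) -> np.ndarray:
    """Classic ES recombination: average the elite candidates.

    Placeholder for the step an LLM would perform (assumption for illustration).
    """
    return elites.mean(axis=0)


def run_es(fitness, recombine, dim=2, popsize=16, n_elite=4,
           sigma=0.3, steps=50, seed=0):
    """Minimize `fitness` with a simple fixed-sigma Evolution Strategy."""
    rng = np.random.default_rng(seed)
    mean = rng.normal(size=dim)  # initial search mean
    for _ in range(steps):
        # Sample a population around the current mean.
        population = mean + sigma * rng.normal(size=(popsize, dim))
        scores = np.array([fitness(x) for x in population])
        # Keep the candidates with the lowest fitness (minimization).
        elites = population[np.argsort(scores)[:n_elite]]
        # Recombine the elites into the next search mean.
        mean = recombine(elites)
    return mean


def sphere(x: np.ndarray) -> float:
    """Toy objective with its optimum at the origin."""
    return float(np.sum(x ** 2))


final_mean = run_es(sphere, mean_recombination)
print(sphere(final_mean))
```

Keeping `recombine` as a plain function argument is the point of the sketch: any operator that maps a set of good candidates to a new mean can be dropped in without touching the rest of the loop.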

Furthermore, our second work, Evolution Transformer 🤖, uses supervised pre-training to make a Transformer act like an Evolution Strategy, via Algorithm Distillation of teacher algorithms. We also explore fine-tuning it with meta-evolution and outline a strategy for training the Transformer in a fully self-referential fashion. You can find the paper here.