On “On the Measure of Intelligence” by F. Chollet (2019)

Published:

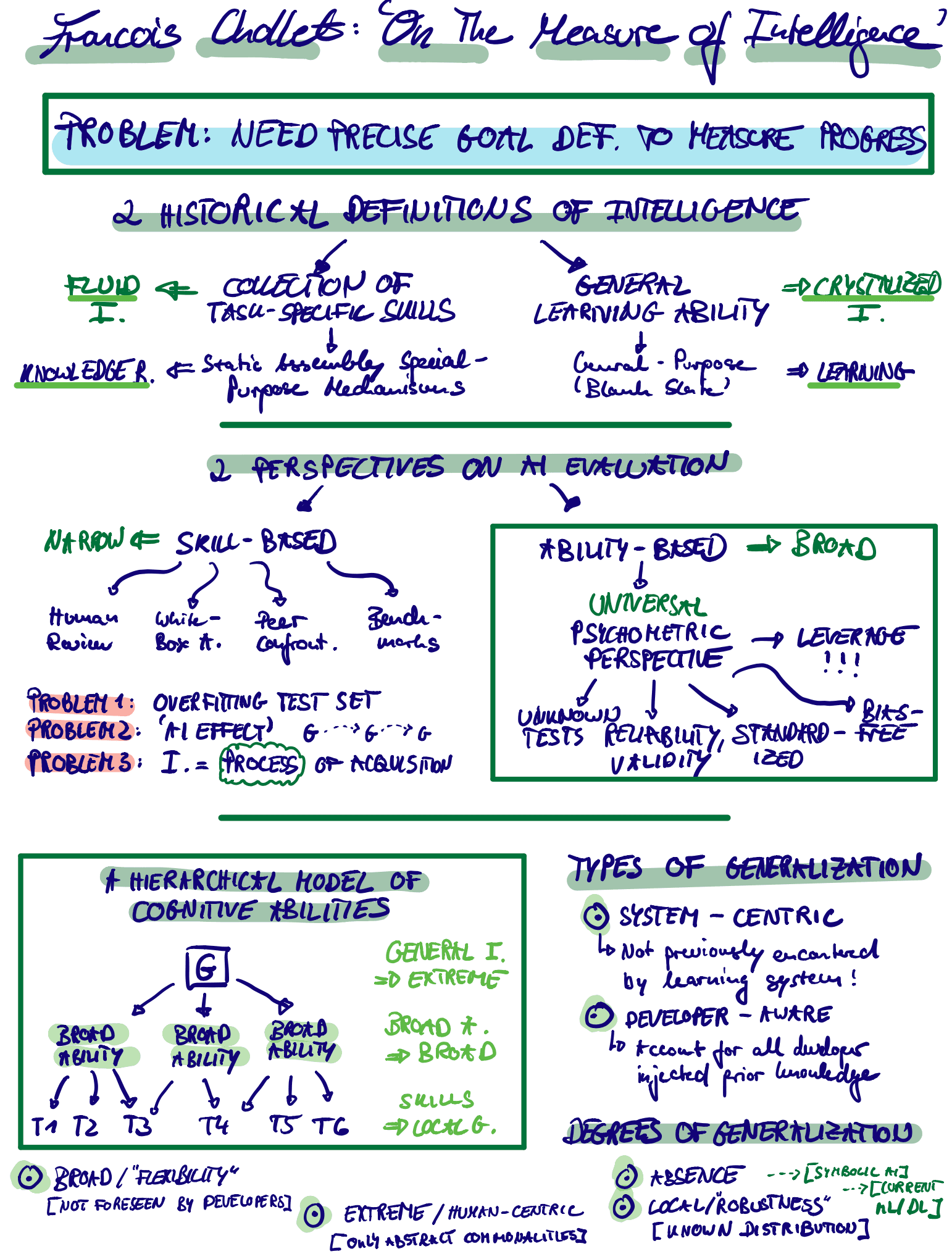

Last week Kaggle announced a new challenge - one that is different in many ways. It is based on the Abstraction and Reasoning Corpus & accompanied by a recent paper by Francois Chollet. In this work Chollet highlights several shortcomings of the current AI research agenda & argues for a psychometric & ability-based assessment of intelligent systems, which allows for standardized & reliable comparison. More importantly, it introduces an actionable definition of human-like general intelligence which is grounded in the notions of priors, experience and generalization difficulty. This definition may be used by the research community to measure progress and to collectively work on a shared goal. After reading the paper, discussing it in our reading group (you can check out Heiner Spiess’ presentation here) and re-reading it, my mind is still sorting the intellectual content. So stick with me & let me give you an opinionated summary of a 64-page gold mine. I also explored the Abstraction & Reasoning Corpus benchmark a little bit, so stick around if you want to get started with tackling intelligence!

The problem: Finding a precise definition of intelligence

Progress in Machine Learning research is most definitely not about hunting the next sexy skill or game. But it oftentimes feels like it. Deep Blue, AlphaGo, OpenAI Five and AlphaStar are all examples of massive PR campaigns which, shortly after their “successful” announcement, have been criticized for their lack of generalization. Every time the “skill ball” is pushed further… to the next challenging game (maybe Hanabi for some theory of mind cooperative multi-agent learning?). But where do we end up? A separate algorithm that solves each game does not necessarily generalize to broader human-like challenges. Games (which allow for fast data generation) are only an artificial subset and not representative of the full spectrum of our species’ ecological niche. In summary: Intelligence is more than a collection of learned skills. Therefore, the AI research community has to settle on a shared goal - a unanimous definition of intelligence.


Historically, there have been many attempts to measure intelligence - with more or less overlap. Chollet’s paper starts out by reviewing two main pillars: skill-based vs. ability-based assessment of intelligence. He argues that while skills are the crucial output of an intelligent system, they are only that - an artifact. Abilities, on the other hand, enlarge the space of possible skill outputs. By the magic of learning (e.g. evolutionary/gradient-based, plasticity-based, neural dynamics-based) an intelligent system is capable of combining previous knowledge and experience with a new situation. This adaptive, task-oriented and very much conditional process might ultimately be the crux of intelligence.

To me it appears fairly obvious that learning & the related emergence of niche-specific inductive biases (such as our bodies & nervous systems) must be the core of intelligence. It bridges all timescales and very much resonates with ideas promoted during NeurIPS 2019 by Jeff Clune, David Ha as well as Blaise Aguera. Our machine learning benchmarks should also reflect this high-level objective. This is not to say that nobody is working in this direction; there are already quite a few attempts out there, including the following:

- Meta Learning: Instead of optimizing the performance of an agent being trained on 200 million Atari game frames, we should aim for rapid adaptation and flexibility in what skills are required to solve a specific task. Meta Learning explicitly formulates fast adaptation as an outer loop objective, while the inner loop is restricted to learning within a few SGD updates or by unrolling the recurrent dynamics.

- Relational Deep Learning & Graph Neural Networks: Relational methods combine deep learning with traditional methods revolving around propositional logic. Thereby, one can “reason” about how representations compare to each other and acquire an interpretable set of attention heads. The hope is that such inferred/learned relational representations transfer easily across tasks.

- Curriculum Learning: Another approach is to set up an efficient sequence of tasks which allows a learning agent to smoothly travel across changing loss surfaces. Doing so requires an overlap between tasks such that transfer of skills makes sense. Intuitively, this is very much like our own acquisition of skills by being progressively challenged.

Still, the majority of the community works on comparable niche problems that drift far away from generalization and human-like general intelligence. So what can we do about this?

Towards a psychometric account of intelligence

The AI & ML communities have been dreaming of an unprecedented notion of universal intelligence. Human cognition and the brain provide a natural starting point. But discoveries in neuroscience appear slow/ambiguous and it is often not obvious how to translate low-level cellular inspiration into algorithmic versions of inductive biases. Psychology and psychometrics - the discipline of psychological measurement (according to Wikipedia) - on the other hand, provide insights on a different level of analysis. Chollet proposes to no longer neglect the insights coming from the community that has developed human intelligence tests for decades.


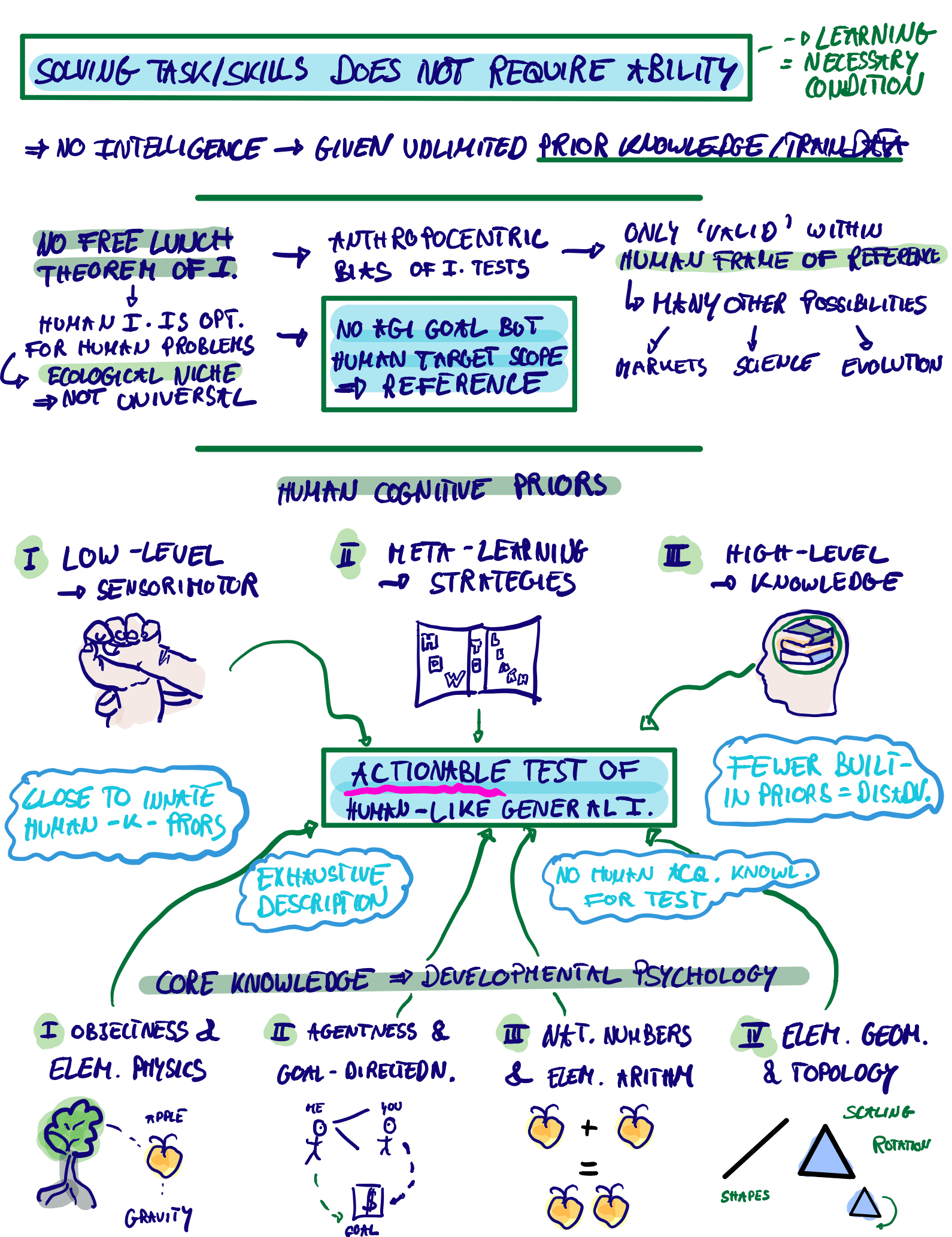

One of his main arguments is against a universal g-factor. Instead, all known intelligent systems are conditioned on their environment and task specifications. Human intelligence is optimized for human problems, and is thereby worse at solving problems that may be encountered on Mars. This is the so-called ‘No Free Lunch Theorem of Intelligence’. Hence, all our definitions of intelligence are only valid within a human frame of reference. We should not neglect an anthropomorphic but actionable assessment of intelligence, but instead embrace it. A psychometric perspective can allow us to be explicit about our developer biases and the prior knowledge we build into our artificial systems. Chollet proposes to develop human-centered tests of intelligence which incorporate human cognitive priors & the developmental psychology notion of core knowledge. These include different levels of description (e.g. low-level sensorimotor reflexes, the ability to learn how to learn as well as high-level knowledge) as well as innate capabilities (e.g. elementary geometry, arithmetic, physics & agency). The actionable definition of intelligence thereby becomes the following:

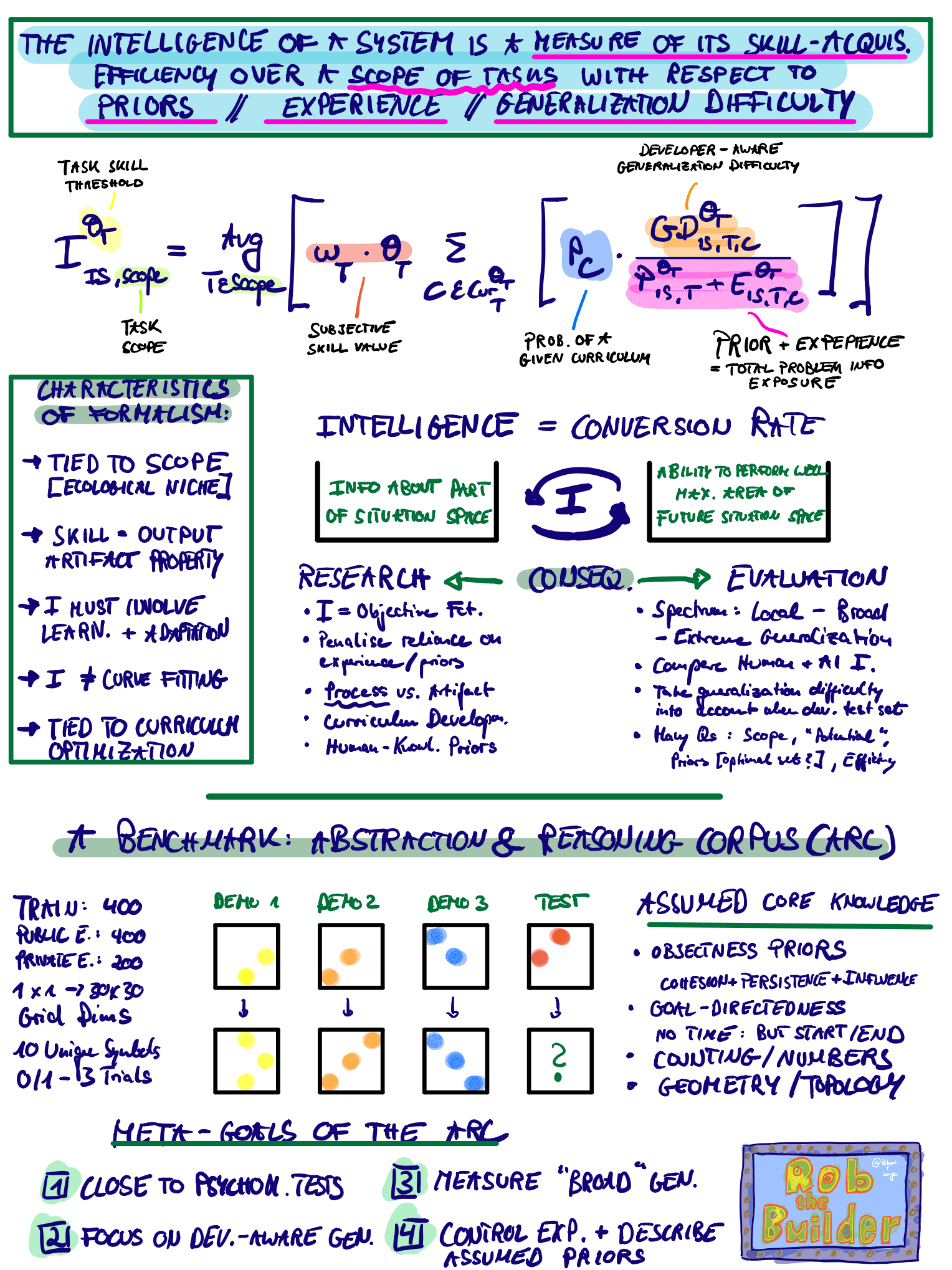

“The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks with respect to priors, experience, and generalization difficulty.” - Chollet (2019; p.27)

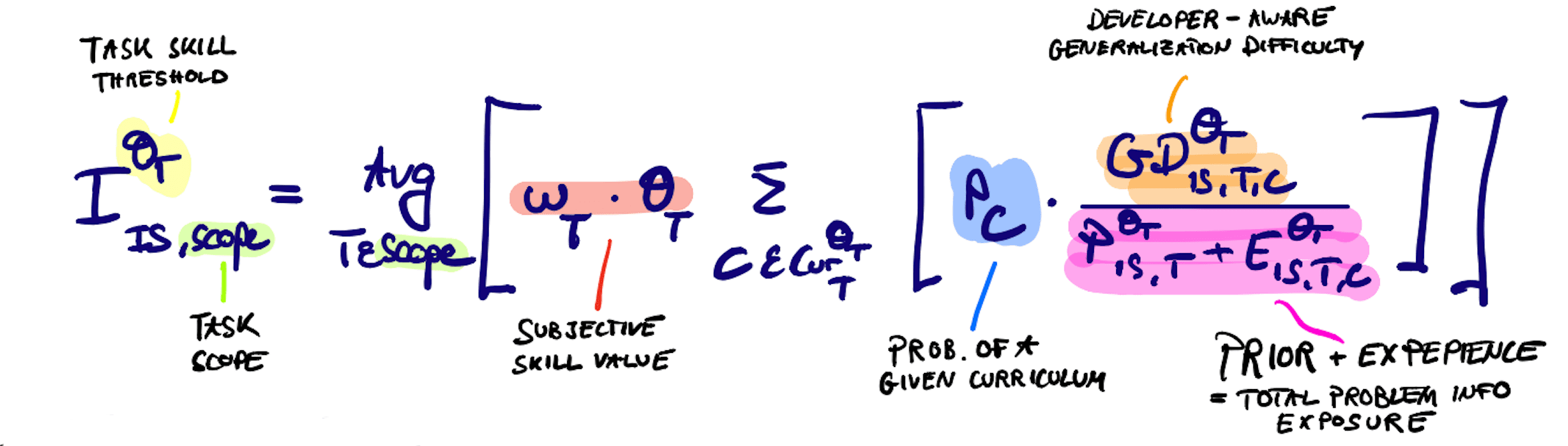

Additionally, Chollet provides a formal algorithmic information theory-based measure of the intelligence of an artificial system:
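For reference, here is the measure as I would reconstruct it from the paper (the so-called “sufficient case”). Treat the typesetting as my own transcription and check it against the original before relying on it. The symbols are: $\omega_T$ a subjective task weight, $\theta_T$ the skill threshold for task $T$, $Cur_T^{\theta_T}$ the space of curricula that reach that threshold, $P_C$ the probability of curriculum $C$, $GD$ the generalization difficulty, $P$ the priors and $E$ the experience of the intelligent system $IS$.

```latex
\[
I^{\theta_T}_{IS,\,scope}
  = \underset{T \in scope}{\mathrm{Avg}}
    \left[
      \omega_T \cdot \theta_T
      \sum_{C \in Cur_T^{\theta_T}}
      \left[
        P_C \cdot
        \frac{GD^{\theta_T}_{IS,T,C}}
             {P^{\theta_T}_{IS,T} + E^{\theta_T}_{IS,T,C}}
      \right]
    \right]
\]
```

The fraction is the part that matters for intuition: generalization difficulty sits in the numerator, priors and experience in the denominator.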

And in words: the measure of intelligence may be interpreted as a conversion rate between a current state of information and the ability to perform well under an uncertain future. It accounts for the generalization difficulty of a task, prior knowledge and experience & allows for subjective weighting of tasks as well as the subjective construction of the tasks we care about. The measure is tied to the presented task scope (ecological niche), treats skills as mere output artifacts and is grounded in a curriculum optimization. On a high level this measure might be used to define a top-down optimization objective. This would allow us to apply some chain rule/automatic differentiation magic (if life was smoothly differentiable) and, most importantly, to quantify progress. It penalizes the amount of prior knowledge as well as experience and thereby follows the principles outlined above.

The obvious next question becomes: So how can we actually act upon such a measurement?

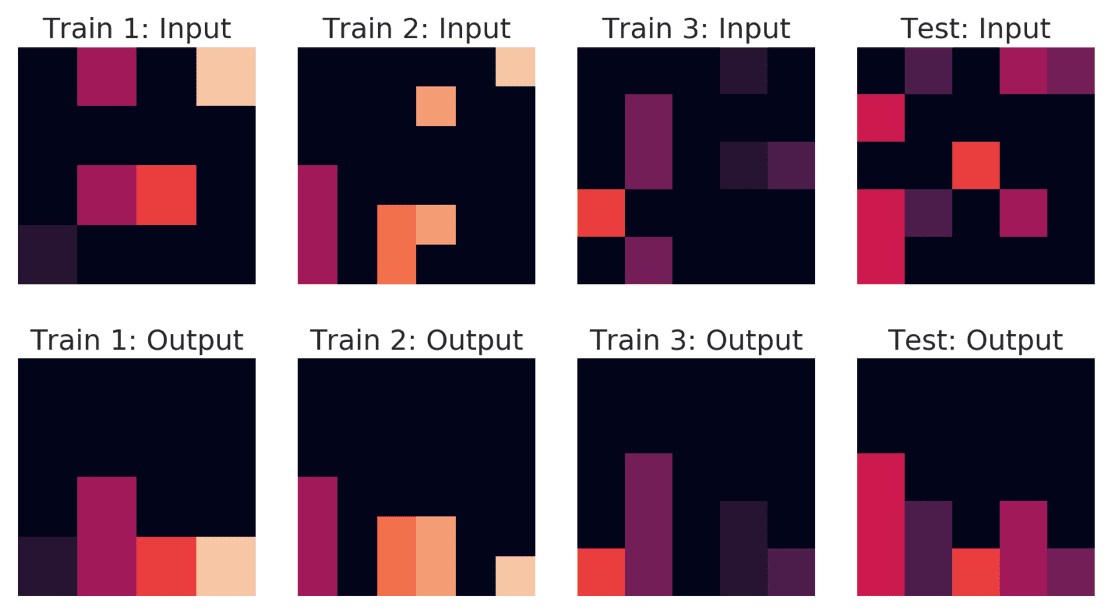

A New Benchmark: The Abstraction and Reasoning Corpus (ARC)

The Abstraction and Reasoning Corpus addresses this question by introducing a novel benchmark that aims to provide a reproducible test of artificial intelligence. It is reminiscent of the classical Raven’s progressive matrices and can be quite tricky from time to time, even for humans. Each task (see example above) provides the system with a set of example input-output pairs and queries an output for a test input. The system is allowed to submit up to 3 solutions and receives a binary reward signal (True/False). The specific solution for the task above requires an approximate understanding of the concept of gravity: the output simply “drops” the objects down to the bottom of the image array. But this is only a single example. The benchmark is a lot broader and requires different notions of core knowledge. The overall dataset consists of 400 train tasks, 400 evaluation tasks and another 200 hold-out test tasks. Quite exciting, right?
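To make the setup concrete, here is a minimal sketch of how such a task could be represented and scored. The `train`/`test` lists of `input`/`output` grids follow the JSON layout of the public ARC files; the grids themselves, the `apply_gravity` rule and the `is_solved` helper are made up for illustration and are not the official evaluation code.

```python
import numpy as np

# Toy task in the ARC JSON layout: "train"/"test" lists of input/output grids.
# The grids are invented for this example; 0 is treated as background.
task = {
    "train": [
        {"input": [[1, 0, 0],
                   [0, 2, 0],
                   [0, 0, 0]],
         "output": [[0, 0, 0],
                    [0, 0, 0],
                    [1, 2, 0]]},
    ],
    "test": [
        {"input": [[0, 3, 0],
                   [4, 0, 0],
                   [0, 0, 5]],
         "output": [[0, 0, 0],
                    [0, 0, 0],
                    [4, 3, 5]]},
    ],
}


def apply_gravity(grid):
    """Let every non-zero cell fall to the bottom of its column."""
    grid = np.asarray(grid)
    out = np.zeros_like(grid)
    for col in range(grid.shape[1]):
        column = grid[:, col]
        cells = column[column != 0]  # keeps the top-to-bottom order
        if cells.size:
            out[grid.shape[0] - cells.size:, col] = cells
    return out


def is_solved(attempts, target, max_attempts=3):
    """Binary score: True if any of the first `max_attempts` grids matches exactly."""
    target = np.asarray(target)
    return any(np.array_equal(np.asarray(a), target) for a in attempts[:max_attempts])


test_pair = task["test"][0]
prediction = apply_gravity(test_pair["input"])
solved = is_solved([prediction], test_pair["output"])
```

Note that `is_solved` has no notion of partial credit: a grid with the wrong shape or a single wrong cell counts as a failed attempt.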

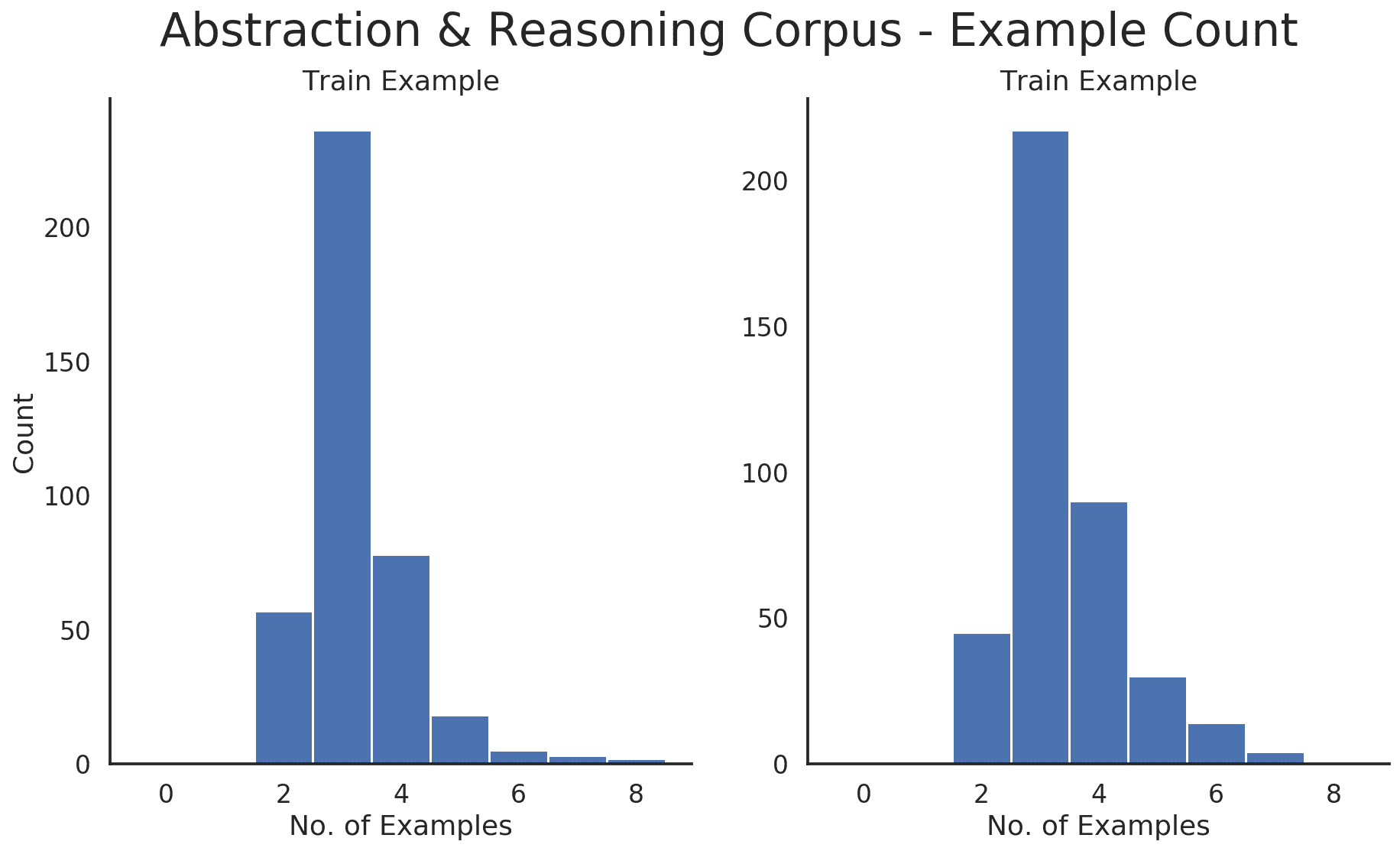

During the last couple of days I have been getting my hands a little dirty with the benchmark. There are several fundamental problems when trying to use plug-and-play Deep (Reinforcement) Learning. The first problem lies in the irregular input/output shapes. If you train your favorite MNIST-CNN, both input and output layer shapes are fixed (i.e. 28x28 pixel inputs and 10 output digit labels). The ARC benchmark is not regular in that sense. Sometimes the examples within a task have varying shapes & the test query has yet another one. Thereby, it becomes impossible to train a network that has a single fixed input/output layer. Furthermore, the number of examples varies (see figure below) and it is unclear how to leverage the 3 attempts. My initial ideas revolve around doing a form of k-fold cross-validation training on the given examples and trying to leverage ideas from the Relational DL community (e.g. PrediNet): with three examples, train on two and test on the final one. Only after convergence & zero cross-validation error do we proceed to the actual test case. The main problem: online training on each example. This can become quite compute-intensive.
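The cross-validation idea can be sketched without any network at all. The snippet below swaps gradient-based training for selection among a handful of hypothetical, shape-agnostic candidate transformations; the candidates, the demonstration pairs and the helper names are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical candidate transformations; each works on grids of any shape.
CANDIDATES = {
    "identity": lambda g: g,
    "flip_lr": np.fliplr,
    "flip_ud": np.flipud,
    "transpose": np.transpose,
}


def consistent(candidates, pairs):
    """Names of candidates that reproduce every (input, output) pair exactly."""
    return [
        name for name, fn in candidates.items()
        if all(np.array_equal(fn(np.asarray(x)), np.asarray(y)) for x, y in pairs)
    ]


def leave_one_out_errors(candidates, pairs):
    """Hold out each pair in turn, select on the rest, test on the held-out pair."""
    errors = 0
    for i, (x_val, y_val) in enumerate(pairs):
        fit_pairs = pairs[:i] + pairs[i + 1:]
        survivors = consistent(candidates, fit_pairs)
        if not survivors:
            errors += 1
            continue
        prediction = candidates[survivors[0]](np.asarray(x_val))
        errors += int(not np.array_equal(prediction, np.asarray(y_val)))
    return errors


# Made-up demonstration pairs with different shapes; the hidden rule is a left-right flip.
pairs = [
    ([[1, 0, 0], [0, 2, 0]], [[0, 0, 1], [0, 2, 0]]),
    ([[3, 4], [0, 5], [6, 0]], [[4, 3], [5, 0], [0, 6]]),
    ([[7, 0, 0, 8]], [[8, 0, 0, 7]]),
]

loo_errors = leave_one_out_errors(CANDIDATES, pairs)
selected = consistent(CANDIDATES, pairs)
```

Only when `loo_errors` is zero would one move on to the actual test input. With a learned model in place of the fixed candidates, each fold means a fresh training run, which is where the compute cost comes from.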

A possible way to leverage the three possible attempts might be in the form of a meta-reinforcement learning objective. This could allow for rapid adaptation. Hence, we would optimize for performance within 3 closed-loop interactions with the oracle. This could also be done by training an RL^2 LSTM that receives the feedback from the previous attempts as an input. Here are a few further insights on the challenge:

- There has to be task-specific training involved for adaptation or program synthesis. It is not enough to simply hard-code all priors into a simple feedforward network.

- When we as humans look at the input-output pairs we immediately figure out the right priors to tackle the test examples on the fly. This includes the color palette that defines the solution space. If the train outputs contain three unique numerical pixel values, the test output is unlikely to contain 20.

- I realized that when I attempt to solve one of the tasks, I do a lot of cross-task inference: double-checking hypotheses and performing a model-based cross-validation. Framing inference as repeated hypothesis testing might be a cool idea.

- Minimizing a pixel-wise MSE loss is fundamentally limited. Since a solution cannot be half-wrong in the evaluation, even a small MSE loss corresponds to a wrong output.

- Core knowledge is hard to encode. Relational deep learning and geometric deep learning provide promising perspectives but are still in their infancy. We are far from being able to emulate evolution with meta-learning.

- The goal of trying to solve everything is too ambitious (for now).
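Two of the points above, the all-or-nothing evaluation and the color palette prior, are easy to illustrate. The grids and the helper names `palette` and `violates_palette` below are made up, and the palette check is only a heuristic filter, not a rule of the benchmark.

```python
import numpy as np

target = np.array([[0, 0, 3],
                   [0, 3, 0],
                   [3, 0, 0]])

# A prediction that is off by one color index in a single cell.
prediction = target.copy()
prediction[0, 0] = 1

mse = float(np.mean((prediction - target) ** 2))  # small but non-zero
exact = bool(np.array_equal(prediction, target))   # what the binary score looks at


def palette(grids):
    """Set of color indices that occur in a list of grids."""
    values = np.concatenate([np.asarray(g).ravel() for g in grids])
    return set(np.unique(values).tolist())


def violates_palette(candidate, train_outputs):
    """True if the candidate uses a color never seen in the training outputs."""
    return not palette([candidate]) <= palette(train_outputs)


off_palette = violates_palette(prediction, [target])
```

Here the MSE is 1/9, which would look like a nearly converged model, yet `exact` is `False`. The palette check flags the same prediction before it is ever submitted, because color 1 never appears in the training outputs.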

Chollet himself proposes to dive into a field called Program Synthesis. Intuitively, this requires you to generate programs to solve some of the tasks yourself and then on a higher-level to learn such program generation.
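As a toy illustration of what program synthesis can mean here, the sketch below enumerates short compositions of grid primitives and returns the first one that reproduces all demonstration pairs. The four-primitive DSL, the demonstration pairs and the brute-force search are my own assumptions for illustration, not Chollet’s proposal in detail.

```python
from itertools import product

import numpy as np

# Hypothetical mini-DSL of grid primitives, chosen only for illustration.
PRIMITIVES = {
    "flip_lr": np.fliplr,
    "flip_ud": np.flipud,
    "transpose": np.transpose,
    "rot90": np.rot90,
}


def run(program, grid):
    """Apply a sequence of primitive names to a grid, left to right."""
    out = np.asarray(grid)
    for name in program:
        out = PRIMITIVES[name](out)
    return out


def synthesize(pairs, max_length=2):
    """Return the shortest program that reproduces all pairs, or None."""
    for length in range(1, max_length + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(np.array_equal(run(program, x), np.asarray(y)) for x, y in pairs):
                return program
    return None


# Made-up demonstrations; the hidden rule is a 180-degree rotation.
pairs = [
    ([[1, 2], [3, 4]], [[4, 3], [2, 1]]),
    ([[5, 0, 0]], [[0, 0, 5]]),
]

program = synthesize(pairs)
```

No single primitive explains both pairs, so the search moves on to length two and returns `("flip_lr", "flip_ud")`. The number of programs grows exponentially with the length, which is why the higher-level step of learning how to generate programs becomes interesting.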

If you're not sure where to start on the ARC competition, here's my advice.

— François Chollet (@fchollet) February 13, 2020

It's a very difficult challenge, but I strongly believe (with some evidence) that someone smart & motivated can develop in a few weeks an approach that solves ~5-10% of the tasks in the hidden test set. pic.twitter.com/1hvZrXugnt

Still, all of these ideas leave the question of which inductive biases to use somewhat open: convolutions for visual processing, attention for set operations, RNNs for memory and for combating occlusions/object permanence? I have drafted a small notebook for everyone interested in getting started with the benchmark. You can find it here. And here you can find a Kaggle kernel that provides “manual” solution programs to 10 tasks from the ARC.

Some concluding thoughts

I really love this quote by Geoffrey Hinton:

“The future depends on some graduate student who is deeply suspicious of everything I have said.”

It is an expression of severe doubt even though backpropagation and major Deep Learning breakthroughs are everywhere. My experience over the last few days has been extremely humbling and has put many things into perspective. I like to be hyped by recent progress but there are also huge challenges in front of the ML community. Deep Learning in its current form is most definitely not the holy grail of intelligence. It lacks flexibility, efficiency and out-of-distribution performance. Intelligent systems have a long way to go. And the ARC benchmark provides a great path. So let’s get started.

P.S.: The challenge runs for 3 months.