EEML 2019 - A (Deep) Week in Bucharest!

Published:

In January I was considering where to go with my scientific future. Torn between staying in Berlin and going back to London, I got frustrated with my technical progress. At NeurIPS I had encountered so much amazing work that I felt there was too much to learn before reaching the cutting edge. I was stuck. And then my former Imperial supervisor forwarded me an email advertising this new Eastern European Machine Learning (EEML) summer school.

I looked at the lineup of speakers and immediately knew - Bucharest, I am coming! It included prominent figures such as Doina Precup (“Mrs. Hierarchical RL”), Andrew Zisserman aka AZ, Nal Kalchbrenner (“Let’s scale things with conditional independence”) and so many more. I was sold and started on my application right away. For anyone who wants to know how a week of Machine Learning madness turned out, this post is written for you!

Note: Credit for almost all pictures used in this post goes to Stefan Cobeli, who was kind enough to document the week and share his photos.


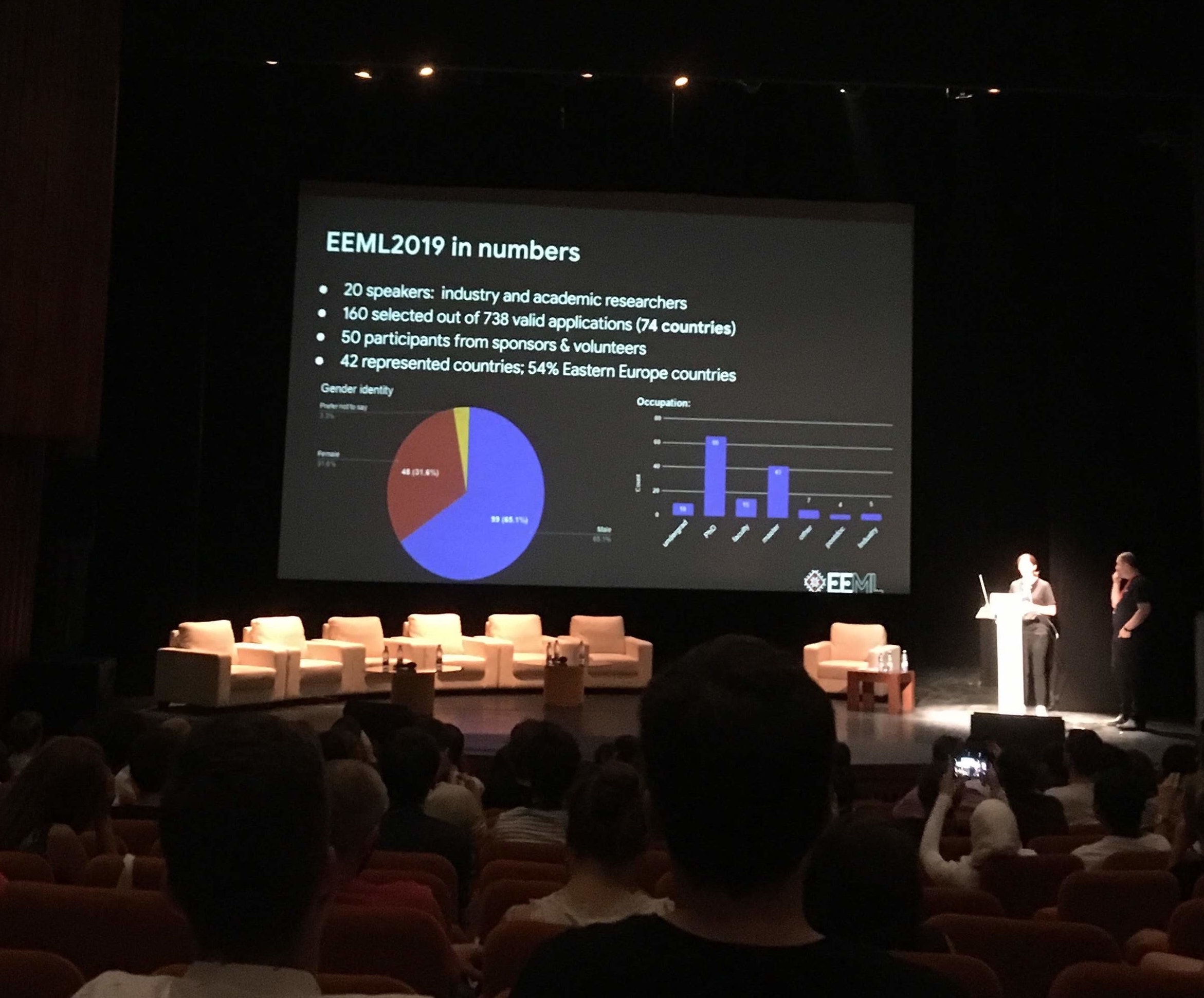

Diversity & Exchange = Key to a better world

Tech education is not fairly distributed across countries, affiliations and genders. Not by a long shot - and that is simply wrong. EEML aims to improve on that by giving back to the Eastern European community and promoting international ML research within the region. The annual summer school is located in an Eastern European country, organized by Eastern Europeans and taught by prominent lecturers from all over the world!

Compared to other summer schools, EEML’s application process was quite involved. It included all the standards (CV, motivational statement, etc.) but also required a two-page extended abstract of your current work. If selected, one would present a corresponding poster in one of two dedicated sessions. I put quite some effort into outlining the current state of my Swarm Multi-Agent RL project. And then it happened… I got rejected! After sadly complaining to all my lab members, I decided to read the footnotes. An additional 5 EEML spots would be given to the best submissions to the co-located RAAI conference. I revised my extended abstract, submitted it there, and that is how I still ended up having a blast in Bucharest.

The program included a series of talks ranging from deep learning and reinforcement learning theory (introduction, Multi-Agent as well as Inverse RL) to more applied Machine Vision/NLP as well as medical applications. You can get a concise overview here. All major topics were supported by computer lab sessions. In these we got to implement many of the previously introduced algorithms in TensorFlow. For me as a PyTorch person this was of immense value. The support of experienced TAs in particular allowed me to translate between the two frameworks very quickly. Finally, there was tons of time to get to know the other participants as well as talk to all of the speakers. The sheer amount of organizational effort and money that went into the catering, the gala dinner and the excursion was amazing. Here are a few of my personal highlights:

Experience Highlights.

Disclaimer: I am still buzzing after a week of consolidation. I cannot possibly thank or credit everyone who contributed to this experience. Here is a brief selection for anyone interested in applying to the next edition of EEML!


The wonderful people I got to meet.

By far the most valuable part of the summer school was the inspiring people that I got to meet. It is fair to say that the selection of participants was outstanding. I got to explore and exploit the diverse cultural as well as technical backgrounds of my co-schoolers. Furthermore, the size of the event (~170 participants) made it possible to get to know all the speakers and to truly discuss subject matters.

A special shoutout goes to Vu Nguyen, Jeroen Berrevoets, Marcel Ackermann as well as Florin Gogianu for a set of outstanding discussions and hopefully some great future collaborations.

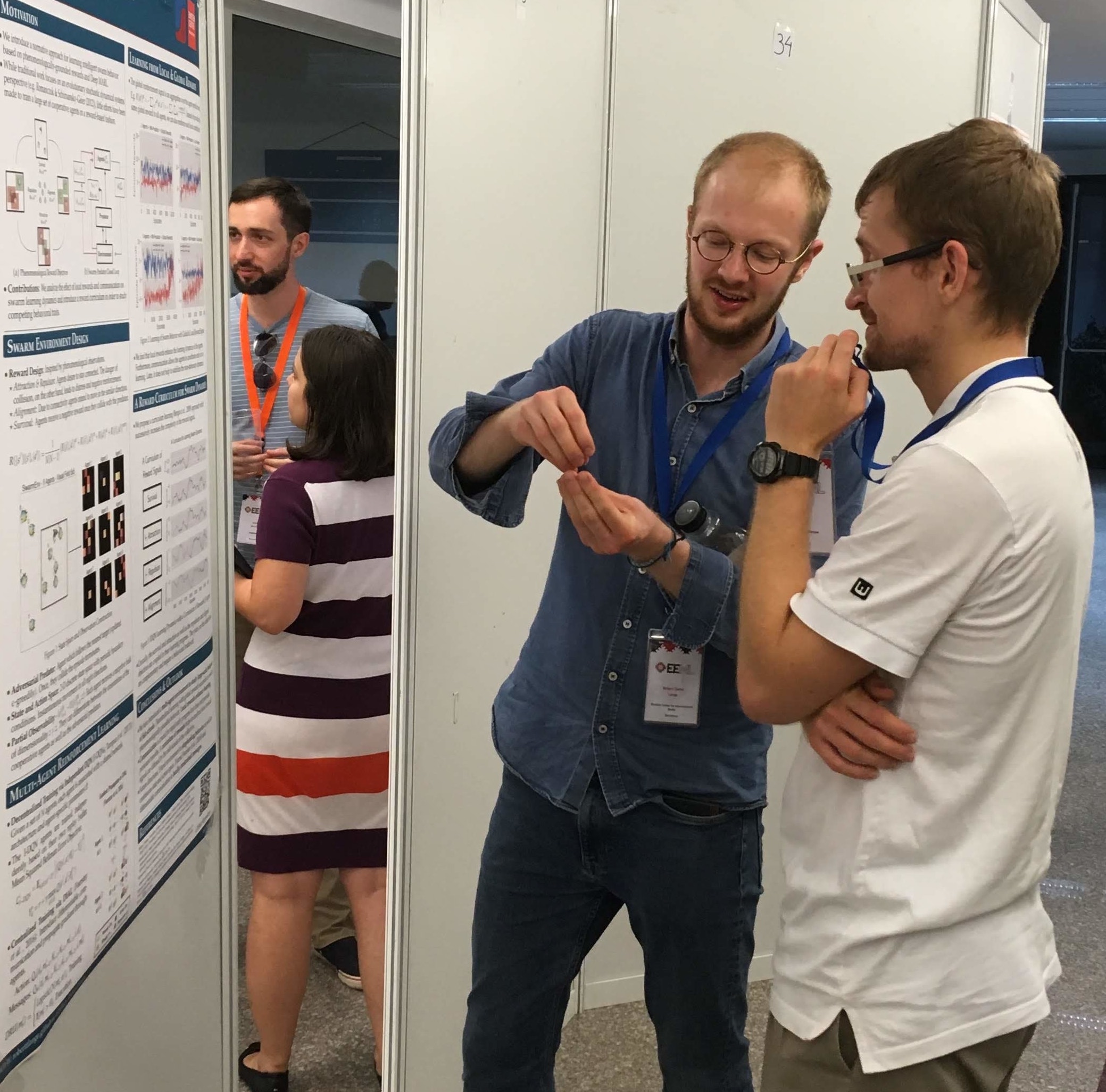


The poster sessions.

On Tuesday and Wednesday evenings all participants got to present their work to the fellow participants as well as speakers. Both sessions went for around 3 hours and most presenters stayed even longer to discuss research directions as well as just mingle. Here are some impressions:

Personally, I think that gently forcing all participants to present is a great idea. I got to know a ton of different current research directions and took away a lot of inspiration for my own work. These include some really cool work by Florin Gogianu on enhancing experience replay by sampling proportional to a measure of Bayesian surprise, some novel model/VAE-based trajectory simulation by Błażej Osiński and many more. You can check out my own poster here!


The unconference.

On the final day all participants formed small groups to discuss a diverse set of topics. These ranged from Bayesian Learning to Ethics in AI. I got to participate in a lively discussion centered around the role of inductive biases and neuroscientific inspiration in current Machine Learning research.

We discussed how the visual cortex shapes our understanding of CNNs, the dopamine reward prediction error hypothesis and many other success stories of neuroscience in ML. The biggest point of discussion revolved around whether or not machine intelligence should strive to be similar to our understanding of mechanisms in the brain. Or is mammalian intelligence itself only a local optimum on our trajectory to AGI?


Beautiful Bucharest - and so much more.

Well, to be completely honest, there was not that much time left to explore the city. Still, the EEML organizers tried to have us experience as much Romanian culture as possible. We had a lovely tour through the Palace of the Parliament, lunch in one of the oldest restaurants (built in the 15th century!) as well as a delicious gala dinner with traditional music and dance.

Technical Highlights (just a few).

The intellectual experience and the content itself were incredible. Honestly, I have never been exposed to so much state-of-the-art material at a summer school. And I have sampled a few. Again, there were so many extraordinary talks I could write about, but I will highlight just a few observations that really stuck with me.


Self-attention is everywhere.

A key element throughout many lectures was the problem of RNNs being hard to scale. Their sequential nature and parallelization at Google-style scale are somewhat at odds. Attention and masked convolutions, on the other hand, provide an efficient and flexible paradigm which underlies the success story of autoregressive models such as WaveNet and PixelCNN. Furthermore, transformers and multi-head attention are everywhere in modern NLP, most visibly in contextual pre-trained embeddings such as BERT (ELMo pursued the same idea of contextual embeddings, but with bidirectional LSTMs). By masking (random) parts of the sequence and predicting them from the context, the architecture learns structure beyond context-independent latent representations. This is very similar to the notion of dropout and robustifying predictions in a distributed fashion. Antoine Bordes’, Razvan Pascanu’s and Nal Kalchbrenner’s talks especially highlighted the immense success story of self-attention in the last 18 months since the original “Attention is all you need” paper. This also made me realize that some large-scale experiments can hardly be done by a CS PhD student who has to share 4 GPUs with other lab members. Ultimately, the role of fundamental research in Machine Learning has to be redefined. And maybe university research should focus more on provable guarantees such as concentration inequalities while industry research focuses on architectures and applications.
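To make the scaling argument a bit more tangible, here is a minimal sketch of single-head scaled dot-product self-attention with a causal mask. It is a toy illustration rather than code from any of the lectures: the function names, the tiny input and the pure-Python lists are all assumptions made for readability. The point is that the output at position t depends only on positions 0..t and never on the output of position t-1, so all rows could be computed in parallel.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    Position t may only attend to positions 0..t. No row needs the
    result of another row, unlike the step-by-step recurrence of an RNN.
    """
    d = len(queries[0])
    outputs, weights = [], []
    for t, q in enumerate(queries):
        # Masking: only score the keys at positions <= t.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in keys[:t + 1]]
        w = softmax(scores)
        # Future positions get weight 0, so the weight matrix is lower triangular.
        weights.append(w + [0.0] * (len(keys) - t - 1))
        # Weighted average of the visible value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, values))
                        for c in range(len(values[0]))])
    return outputs, weights

# Toy sequence of three 2-d token vectors, used as queries, keys and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = causal_self_attention(x, x, x)
```

The first token can only see itself, so its output is just its own value vector. Real implementations express the same computation as a few batched matrix multiplications, which is where the hardware efficiency comes from.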


Self-supervised learning is the future.

Two of the most empirically impressive talks were given by Andrew Zisserman and Joao Carreira (previously at the RAAI conference). Both presented current work on using self-supervised proxy tasks together with large-scale data/compute in order to pre-train architectures. These included color prediction from greyscale images, order permutation tasks on a set of cropped patches as well as “shuffle & learn” tasks on complete video frames. The network learns relevant parts of the data-generating process, such as contours and temporal correlation, which are universal to core vision. Afterwards, one only requires very few labelled samples in order to perform k-shot learning on more complicated tasks such as object recognition on ImageNet. In one of the breaks I had a brief conversation with AZ on how this might relate to meta-learning and more specifically Chelsea Finn’s MAML concept of optimizing for a weight initialization. By training on a set of sampled tasks, the weights are nudged towards a most “agile” state from which transfer learning is easy (given overlap in the task distributions). I found it really nice to think of self-supervised learning as a form of slow time-scale evolution in which a set of useful inductive biases might be discovered. During the meta-test phase the network is tuned on a fast time-scale to the task at hand.
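The two time-scales can be sketched with a deliberately tiny MAML-style loop. Everything here is an assumption chosen for illustration: a single scalar weight, the task loss (w - target)^2, the three hard-coded task targets and the learning rates. With this quadratic loss the derivative of the adapted weight with respect to the initial weight is simply 1 - 2 * inner_lr, so the meta-gradient can be written down by hand instead of relying on automatic differentiation.

```python
def grad(w, target):
    """Gradient of the toy task loss (w - target)**2 with respect to w."""
    return 2.0 * (w - target)

def adapt(w, target, inner_lr):
    """Fast time-scale: one gradient step on a single task."""
    return w - inner_lr * grad(w, target)

def maml_meta_gradient(w, target, inner_lr):
    """Gradient of the post-adaptation loss with respect to the initial w.

    Chain rule: dL(w')/dw = dL/dw' * dw'/dw with w' = adapt(w), and
    dw'/dw = 1 - 2 * inner_lr for this particular quadratic loss.
    """
    w_adapted = adapt(w, target, inner_lr)
    return grad(w_adapted, target) * (1.0 - 2.0 * inner_lr)

def meta_train(task_targets, w=0.0, inner_lr=0.1, meta_lr=0.1, steps=200):
    """Slow time-scale: move the initialization so one inner step helps on every task."""
    for _ in range(steps):
        meta_grad = sum(maml_meta_gradient(w, t, inner_lr) for t in task_targets)
        w -= meta_lr * meta_grad / len(task_targets)
    return w

# Three hypothetical tasks whose optimal weights are 1, 2 and 3.
w_init = meta_train([1.0, 2.0, 3.0])
# Meta-test: a single fast step from the learned initialization towards one task.
w_task = adapt(w_init, 3.0, inner_lr=0.1)
```

In this toy setting the learned initialization ends up in the middle of the task optima, which is the “agile” state from which every task is only a few steps away. In a real network the meta-gradient involves second-order terms and is usually computed by an autodiff framework.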


{???} Learning $\to$ {!!!} Learning.

Nowadays it is kind of hard to stay up-to-date with all the different paradigms of learning. Lifelong, Incremental, Never-ending, Continual. Well, it turns out they all fall under the same umbrella. In an amazing review talk Tinne Tuytelaars gave us a comprehensive overview of the differences within the zoo of supervised learning types involving more than one task. More specifically, continual learning involves learning a stream of sequential tasks without storing any previous data. This reduces the memory footprint but introduces the challenge of catastrophic forgetting. Tinne covered regularization-based, rehearsal-based as well as architecture-based approaches. Striking to me was that most approaches enforce the emergence of task-specific sub-networks within one architecture. Some of them do so in a fairly soft fashion (using a form of distillation loss as regularizer) while others do so more explicitly by pruning and freezing parts of the network dedicated to certain tasks. Some of them seem fairly ad hoc, but I was happy to see some work of prominent computational neuroscientists highlighted (e.g. Claudia Clopath and Friedemann Zenke). All in all a great talk and knowledge diffusion (… and hopefully no catastrophic forgetting)!
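As a rough illustration of the regularization-based family, the following sketch trains a two-parameter model on task A and then on task B, once naively and once with an importance-weighted quadratic penalty that anchors the weights task A relied on. This is a generic simplification and not a specific method from the talk: the two quadratic task losses, the hand-set importance vector and the penalty strength are all assumptions.

```python
def train(w, grad_fn, lr=0.04, steps=500):
    """Plain gradient descent on a list of weights."""
    for _ in range(steps):
        g = grad_fn(w)
        w = [wi - lr * gi for wi, gi in zip(w, g)]
    return w

def loss_a(w):
    """Task A only cares about the first weight."""
    return (w[0] - 1.0) ** 2

def grad_a(w):
    return [2.0 * (w[0] - 1.0), 0.0]

def grad_b(w):
    """Task B wants to move both weights: loss (w0 - 3)^2 + (w1 - 2)^2."""
    return [2.0 * (w[0] - 3.0), 2.0 * (w[1] - 2.0)]

def penalized_grad_b(w, anchor, importance, strength):
    """Task B gradient plus the gradient of strength * sum(imp_i * (w_i - anchor_i)^2)."""
    return [gi + 2.0 * strength * imp * (wi - ai)
            for gi, imp, wi, ai in zip(grad_b(w), importance, w, anchor)]

w_a = train([0.0, 0.0], grad_a)   # learn task A first
importance = [1.0, 0.0]           # assumed: only w0 mattered for task A
strength = 9.0                    # assumed penalty strength

naive = train(w_a, grad_b)        # task B without any protection
regularized = train(
    w_a, lambda w: penalized_grad_b(w, w_a, importance, strength))

forgetting_naive = loss_a(naive)              # task A loss after naive training
forgetting_regularized = loss_a(regularized)  # task A loss with the penalty
```

The naive run drags the first weight all the way to task B's optimum and loses task A, while the penalized run keeps it close to its old value and lets the unimportant second weight move freely. That split is a miniature version of the task-specific sub-networks mentioned above.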

Thank you very much @EEML Organizers!

Learning is about transitioning topics from the space of unknown unknowns into the realm of the known unknowns. Only afterwards, and with many hours of hard work, can one finally truly master a topic. And EEML definitely delivered on all fronts. A massive thank you goes out to all the organizers, the super helpful volunteers as well as the TAs. This was a wonderful week!

To all those who did not get the chance this year: Simply apply! You won’t regret it. Check out my handwritten summaries of the summer school lectures to get a better impression:

- Days 1 & 2: Including talks by Razvan Pascanu, Doina Precup & Shimon Whiteson.

- Days 3 & 4: Including talks by Tinne Tuytelaars, Andrew Zisserman & Anca Dragan.

- Days 5 & 6: Including talks by Razvan Pascanu, Antoine Bordes & Nal Kalchbrenner.

I am privileged to have been selected. Over the course of the last year my mantra has become to give back as much as I can and to appreciate my responsibility. So please get in touch if you want to know more about EEML, the application process or being an ML PhD student in Berlin.

In any case - keep on learning and evolving!

Love, Rob